Using 🤗 datasets for image search

from rich import inspect
from datasets.features import features

tl;dr: it’s really easy to use the Hugging Face datasets library to create an image search application, but it might not be suitable for sharing. Update: an updated version of this post is on the 🤗 blog!

🤗 Datasets is a library for easily accessing and sharing datasets and evaluation metrics for Natural Language Processing (NLP), computer vision, and audio tasks. (source)

When datasets was first launched it was mostly associated with text data and NLP. However, datasets now has support for images. In particular, there is now a datasets feature type for images. In this blog post I play around with this new datatype, in combination with some other nice features of the library, to make an image search app.

To start, let’s take a look at the image feature. We can use the wonderful rich library to poke around Python objects (functions, classes, etc.).

inspect(features.Image, help=True)

<class 'datasets.features.image.Image'>
def Image(id: Union[str, NoneType] = None) -> None:

Image feature to read image data from an image file.

Input: The Image feature accepts as input:
- A :obj:`str`: Absolute path to the image file (i.e. random access is allowed).
- A :obj:`dict` with the keys:
    - path: String with relative path of the image file to the archive file.
    - bytes: Bytes of the image file.
  This is useful for archived files with sequential access.
- An :obj:`np.ndarray`: NumPy array representing an image.
- A :obj:`PIL.Image.Image`: PIL image object.

dtype = 'dict'
id = None
pa_type = None

We can see there are a few different ways in which we can pass in our images. We’ll come back to this in a little while.

A really nice feature of the datasets library (beyond the functionality for processing data, memory mapping etc.) is that you get some nice things for free. One of these is the ability to add a faiss index. faiss is a “library for efficient similarity search and clustering of dense vectors”.

The datasets docs show an example of using faiss for text retrieval. What I’m curious about is using a faiss index to search for images. This can be super useful for a number of reasons but also comes with some potential issues.

The dataset: “Digitised Books - Images identified as Embellishments. c. 1510 - c. 1900. JPG”

This is a dataset of images which have been pulled from a collection of digitised books from the British Library. These images come from books across a wide time period and from a broad range of domains. The images were extracted using information in the OCR output for each book. As a result, we know which book each image came from, but not necessarily anything else about the image, i.e. what it depicts.

Some attempts to help overcome this have included uploading the images to Flickr. This allows people to tag the images or put them into various categories.

There have also been projects to tag the dataset using machine learning. This work already makes it possible to search by tags, but we might want a ‘richer’ ability to search. For this particular experiment I will work with a subset of the collection containing “embellishments”. This dataset is a bit smaller, so it is better suited to experimenting. We can get the data from the BL repository: https://doi.org/10.21250/db17

# hide_output

!aria2c -x8 -o dig19cbooks-embellishments.zip "https://bl.iro.bl.uk/downloads/ba1d1d12-b1bd-4a43-9696-7b29b56cdd20?locale=en"

!unzip -q dig19cbooks-embellishments.zip

Install required packages

There are a few packages we’ll need for this work. To start with we’ll need the datasets library.

# hide output

import sys

!{sys.executable} -m pip install datasets

Now that we have the data downloaded, we’ll try to load it into datasets. There are various ways of doing this. To start with, we can grab all of the files we need.

from pathlib import Path

files = list(Path('embellishments/').rglob("*.jpg"))

Since the file path encodes the year of publication for the book the image came from, let’s create a function to grab that.
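Before writing that function, it helps to check what `Path.parts` returns for a path laid out as `embellishments/<year>/<file name>`. The snippet below uses `PurePosixPath` so it runs without the files being present. `get_year_and_name` is a hypothetical, slightly more forgiving variant that reads the year from the parent directory name, so it doesn't depend on the path having exactly three components.

```python
from pathlib import PurePosixPath
from typing import Tuple

# A path with the same layout as the files found by rglob above
p = PurePosixPath(
    "embellishments/1855/000811462_05_000205_1_The Pictorial History of England "
    "being a history of the people as well as a hi_1855.jpg"
)

# .parts splits the path into its components: (top folder, year, file name)
print(p.parts)


def get_year_and_name(f: PurePosixPath) -> Tuple[str, str]:
    """Hypothetical variant of get_parts: the year is the name of the directory
    that directly contains the file, however deeply that directory is nested."""
    return f.parent.name, f.name


print(get_year_and_name(p))
print(get_year_and_name(PurePosixPath("data/embellishments/1870/x.jpg")))
```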

def get_parts(f: Path):
    # Paths look like embellishments/<year>/<file name>
    _, year, fname = f.parts
    return year, fname

📸 Loading the images

The images are fairly large. Since this is an experiment, we’ll shrink them using Pillow’s thumbnail method, which keeps the aspect ratio of our images.
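As a rough guide to what `thumbnail((224, 224))` does to each image's size, here is a minimal pure-Python sketch of the arithmetic: one scale factor fits the image inside the box, which keeps the aspect ratio, and it is capped at 1 because `thumbnail` never enlarges an image. `thumbnail_size` is a hypothetical helper, and Pillow's own rounding may differ by a pixel in edge cases.

```python
def thumbnail_size(size, max_size=(224, 224)):
    """Approximate the size Image.thumbnail(max_size) produces.

    Shrinks to fit inside max_size, keeps the aspect ratio, never enlarges.
    Pillow's exact rounding can differ by a pixel in edge cases.
    """
    width, height = size
    max_w, max_h = max_size
    # A single scale factor for both sides preserves the aspect ratio;
    # capping it at 1.0 leaves images that already fit untouched.
    scale = min(max_w / width, max_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


print(thumbnail_size((1000, 500)))  # wide image: width hits the limit -> (224, 112)
print(thumbnail_size((300, 600)))   # tall image: height hits the limit -> (112, 224)
print(thumbnail_size((100, 50)))    # already small: unchanged -> (100, 50)
```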

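from PIL import Image
import io

def load_image(path):
    # Read the raw bytes and open them from memory, so Pillow doesn't record a filename
    with open(path, 'rb') as f:
        im = Image.open(io.BytesIO(f.read()))
        # Shrink in place to fit within 224x224, keeping the aspect ratio
        im.thumbnail((224, 224))
    return im

im = load_image(files[0])

im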

Where is the image 🤔

You may have noticed that the load_image function doesn’t pass the file path to Pillow directly. Usually we would do Image.open(filepath). This is deliberate: because load_image opens the image from bytes held in memory, you’ll see when we inspect the resulting image that its filename attribute is empty.

#collapse_output

inspect(im)

<class 'PIL.JpegImagePlugin.JpegImageFile'>
<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=200x224 at 0x7FBBB392D040>

app = {'APP0': b'JFIF\x00\x01\x01\x00\x00\x01\x00\x01\x00\x00'}
applist = [('APP0', b'JFIF\x00\x01\x01\x00\x00\x01\x00\x01\x00\x00')]
bits = 8
custom_mimetype = None
decoderconfig = (2, 0)
decodermaxblock = 65536
encoderconfig = (False, -1, -1, b'')
encoderinfo = {}
filename = ''
format = 'JPEG'
format_description = 'JPEG (ISO 10918)'
fp = None
height = 224
huffman_ac = {}
huffman_dc = {}
icclist = []
im = <ImagingCore object at 0x7fbba120dc10>
info = {'jfif': 257, 'jfif_version': (1, 1), 'jfif_unit': 0, 'jfif_density': (1, 1)}
layer = [(1, 2, 2, 0), (2, 1, 1, 1), (3, 1, 1, 1)]
layers = 3
map = None
mode = 'RGB'
palette = None
pyaccess = None
quantization = {
    0: [2, 2, 1, 2, 3, 6, 7, 9,
        2, 2, 2, 3, 4, 8, 8, 8,
        2, 2, 2, 3, 6, 8, 10, 8,
        2, 2, 3, 4, 7, 12, 11, 9,
        3, 3, 5, 8, 10, 15, 14, 11,
        3, 5, 8, 9, 11, 15, 16, 13,
        7, 9, 11, 12, 14, 17, 17, 14,
        10, 13, 13, 14, 16, 14, 14, 14],
    1: [2, 3, 3, 7, 14, 14, 14, 14,
        3, 3, 4, 9, 14, 14, 14, 14,
        3, 4, 8, 14, 14, 14, 14, 14,
        7, 9, 14, 14, 14, 14, 14, 14,
        14, 14, 14, 14, 14, 14, 14, 14,
        14, 14, 14, 14, 14, 14, 14, 14,
        14, 14, 14, 14, 14, 14, 14, 14,
        14, 14, 14, 14, 14, 14, 14, 14]
}
readonly = 0
size = (200, 224)
tile = []
width = 200

We can also check this directly:

im.filename

''

Pillow usually loads images lazily, i.e. it only reads the image data when it is needed, and it uses the file path to access the image. We can see that the filename attribute is present if we open the image from its file path:

im_file = Image.open(files[0])

im_file.filename

'/Users/dvanstrien/Documents/daniel/blog/_notebooks/embellishments/1855/000811462_05_000205_1_The Pictorial History of England being a history of the people as well as a hi_1855.jpg'

The reason I don’t want the filename attribute present here is that I want to use datasets not only to process our images but also to store them. If we pass a Pillow object with the filename attribute set, datasets will use this path to load the image. This is often what we’d want, but not here, for reasons we’ll see shortly.
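To make the difference concrete, the sketch below saves a tiny PNG to a temporary directory, then opens it once from its path and once from in-memory bytes (as `load_image` does). `storage_dict` is a hypothetical, simplified illustration of the behaviour described above, based on my understanding of how datasets encodes Pillow images: keep only the path when the image knows its file, otherwise serialise the pixels to bytes. It is not datasets' actual code, and it always uses PNG for simplicity.

```python
import io
import os
import tempfile

from PIL import Image

# Save a small image to disk so we have a real file path to open
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "example.png")
Image.new("RGB", (8, 8), color="red").save(path)

# Opened from a path: Pillow records where the image came from
with Image.open(path) as im_from_path:
    print(repr(im_from_path.filename))

# Opened from in-memory bytes, as load_image does: there is no path to record
with open(path, "rb") as f:
    im_from_bytes = Image.open(io.BytesIO(f.read()))
print(repr(im_from_bytes.filename))


def storage_dict(img):
    """Hypothetical, simplified sketch (not datasets' code) of path-vs-bytes storage."""
    if getattr(img, "filename", ""):
        # Only a reference to the file: it must still exist wherever the data is used
        return {"path": img.filename, "bytes": None}
    # No file to point to, so the pixels themselves are serialised
    buffer = io.BytesIO()
    img.save(buffer, format="PNG")
    return {"path": None, "bytes": buffer.getvalue()}


print(storage_dict(im_from_path)["bytes"])             # None: only the path is kept
print(storage_dict(im_from_bytes)["bytes"][:4])        # PNG signature bytes
```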

Preparing images for datasets

We can now gather our images. We’ll loop through all our image files and store the information for each one (file name, year and path) in a dictionary.

from collections import defaultdict
from tqdm import tqdm

data = defaultdict(list)
for file in tqdm(files):
    # Only the metadata is collected here; the images themselves are loaded later
    year, fname = get_parts(file)
    data['fname'].append(fname)
    data['year'].append(year)
    data['path'].append(str(file))

100%|██████████| 416944/416944 [00:05<00:00, 77169.45it/s]

We can now use the from_dict method to create a new dataset.
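`Dataset.from_dict` takes a column-oriented mapping: each key is a column name and each value is a list with one entry per row, which is exactly what the `defaultdict(list)` loop builds. The plain-Python sketch below, with made-up file names, checks that every column has the same length and shows that row `i` is just the `i`-th value of every column, the same shape `dataset[i]` returns.

```python
from collections import defaultdict

# Column-oriented data built the same way as the loop above (file names are made up)
data = defaultdict(list)
for year, fname in [("1855", "a.jpg"), ("1870", "b.jpg")]:
    data["fname"].append(fname)
    data["year"].append(year)
    data["path"].append(f"embellishments/{year}/{fname}")

# Every column needs the same number of entries: one per row
lengths = {name: len(values) for name, values in data.items()}
assert len(set(lengths.values())) == 1, lengths

# Row i is the i-th value of every column
rows = [dict(zip(data.keys(), values)) for values in zip(*data.values())]
print(rows[0])  # {'fname': 'a.jpg', 'year': '1855', 'path': 'embellishments/1855/a.jpg'}
```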

from datasets import Dataset

dataset = Dataset.from_dict(data)

We can look at one example to see what this looks like.

dataset[0]

{'fname': '000811462_05_000205_1_The Pictorial History of England being a history of the people as well as a hi_1855.jpg',

'year': '1855',

'path': 'embellishments/1855/000811462_05_000205_1_The Pictorial History of England being a history of the people as well as a hi_1855.jpg'}

Loading our images

At the moment our dataset has the filename and full path for each image. However, we want to have an actual image loaded into our dataset. We already have a load_image function. This gets us most of the way there, but we might also want some way of dealing with image errors. The datasets library has gained improved support for handling None values, including for images (see pull request 3195).

We’ll wrap our load_image function in a try block, catch an Image.UnidentifiedImageError and return None if we can’t load the image.

def try_load_image(filename):
    try:
        image = load_image(filename)
        if isinstance(image, Image.Image):
            return image
    except Image.UnidentifiedImageError:
        # Pillow couldn't identify the file as an image, so store None instead
        return None

%%time

dataset = dataset.map(lambda example: {"img": try_load_image(example['path'])}, writer_batch_size=50)

CPU times: user 51min 42s, sys: 4min 31s, total: 56min 13s
Wall time: 1h 10min 31s

Let’s see what this looks like.

dataset

Dataset({

features: ['fname', 'year', 'path', 'img'],

num_rows: 416944

})

We have an image column; let’s check the type of all our features.

dataset.features

{'fname': Value(dtype='string', id=None),

'year': Value(dtype='string', id=None),

'path': Value(dtype='string', id=None),

'img': Image(id=None)}

This is looking great already. Since some images might be None, let’s get rid of these.

dataset = dataset.filter(lambda example: example['img'] is not None)

dataset

Dataset({

features: ['fname', 'year', 'path', 'img'],

num_rows: 416935

})

You’ll see we lost a few rows (nine) by doing this filtering. We should now only have images which loaded successfully.

If we access an example and index into the img column we’ll see our image 😃

dataset[10]['img']

Push all the things to the hub!

One of the super awesome things about the Hugging Face ecosystem is the Hugging Face Hub. We can use the hub to access models and datasets. Often this is used for sharing work with others, but it can also be a useful tool for work in progress. The datasets library recently added a push_to_hub method that allows you to push a dataset to the hub with minimal fuss. This can be really helpful, since it lets you pass around a dataset with all the transformations etc. already done.

When I started playing around with this feature I was also keen to see if it could be used as a way of ‘bundling’ everything together. This is where I noticed that if you push a dataset containing images which Pillow loaded from file paths, the version on the hub won’t have the images attached. If you always have the image files in the same place when you work with the dataset, this doesn’t matter. If you want the images stored in the parquet file(s) associated with the dataset, you need to load them without the filename attribute present (there might be another way of ensuring that datasets doesn’t rely on the image file being on the file system; if you know of one I’d love to hear about it).

Since we loaded our images this way, when we download the dataset from the hub onto a different machine the images are already there 🤗
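On the open question of other ways to avoid depending on files on disk: one avenue that might work, which I haven't tested here, is to build the `{"path": ..., "bytes": ...}` dict form listed in the Image feature docstring yourself, with the raw file bytes filled in. Such dicts could then go into a column that is cast with `Dataset.cast_column(..., features.Image())`. `image_file_to_feature_dict` is a hypothetical helper, and the throwaway file in the usage example just holds a few placeholder bytes rather than a real JPEG.

```python
import os
import tempfile


def image_file_to_feature_dict(path):
    """Hypothetical helper: package an image file in the dict form listed in the
    Image feature docstring, carrying the raw bytes so nothing depends on the
    file staying on disk afterwards."""
    with open(path, "rb") as f:
        raw = f.read()
    # Keep only the file name as 'path'; the image content travels in 'bytes'
    return {"path": os.path.basename(path), "bytes": raw}


# Usage with a throwaway file standing in for an image (placeholder bytes, not a real JPEG)
tmp_dir = tempfile.mkdtemp()
fake_image = os.path.join(tmp_dir, "example.jpg")
with open(fake_image, "wb") as f:
    f.write(b"\xff\xd8\xff\xe0 placeholder bytes")

example = image_file_to_feature_dict(fake_image)
print(example["path"], len(example["bytes"]))
```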

For now we’ll push the dataset to the hub and keep it private initially.

dataset.push_to_hub('davanstrien/embellishments', private=True)

The repository already exists: the `private` keyword argument will be ignored.

Switching machines

At this point I’ve created a dataset and moved it to the Hugging Face Hub. This means it is possible to pick up the work/dataset elsewhere.

In this particular example, having access to a GPU is important, so the next parts of this notebook are run on Colab instead of locally on my laptop.

We’ll need to log in since the dataset is currently private.

!huggingface-cli login

Once we’ve done this we can load our dataset

from datasets import load_dataset

dataset = load_dataset("davanstrien/embellishments", use_auth_token=True)

Using custom data configuration davanstrien--embellishments-543da8e15e8f0242
Downloading and preparing dataset None/None (download: 2.38 GiB, generated: 2.50 GiB, post-processed: Unknown size, total: 4.88 GiB) to /root/.cache/huggingface/datasets/parquet/davanstrien--embellishments-543da8e15e8f0242/0.0.0/1638526fd0e8d960534e2155dc54fdff8dce73851f21f031d2fb9c2cf757c121...
Dataset parquet downloaded and prepared to /root/.cache/huggingface/datasets/parquet/davanstrien--embellishments-543da8e15e8f0242/0.0.0/1638526fd0e8d960534e2155dc54fdff8dce73851f21f031d2fb9c2cf757c121. Subsequent calls will reuse this data.

Creating embeddings 🕸

We now have a dataset with a bunch of images in it. To begin creating our image search app we need to create embeddings for these images. There are various ways to do this, but one option is to use the CLIP models via the sentence_transformers library. The CLIP model from OpenAI learns a joint representation for both images and text, which is very useful here since we want to input text and get back an image. We can download the model using the SentenceTransformer class.

from sentence_transformers import SentenceTransformer, util

model = SentenceTransformer('clip-ViT-B-32')

ftfy or spacy is not installed using BERT BasicTokenizer instead of ftfy.

This model will encode either an image or some text, returning an embedding. We can use the map method to encode all our images.

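ds_with_embeddings = dataset.map(
    # batched=True passes lists of up to batch_size images to model.encode at once;
    # without it, batch_size is ignored and each image is encoded on its own
    lambda batch: {'embeddings': model.encode(batch['img'], device='cuda')},
    batched=True,
    batch_size=32)

We can “save” our work by pushing back to the hub.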

ds_with_embeddings.push_to_hub('davanstrien/embellishments', private=True)

Pushing split train to the Hub.
The repository already exists: the `private` keyword argument will be ignored.

If we were to move to a different machine we could grab our work again by loading it from the hub 😃

from datasets import load_dataset

ds_with_embeddings = load_dataset("davanstrien/embellishments", use_auth_token=True)

Using custom data configuration davanstrien--embellishments-c2c1f142f272db02
Downloading and preparing dataset None/None (download: 3.19 GiB, generated: 3.30 GiB, post-processed: Unknown size, total: 6.49 GiB) to /root/.cache/huggingface/datasets/parquet/davanstrien--embellishments-c2c1f142f272db02/0.0.0/1638526fd0e8d960534e2155dc54fdff8dce73851f21f031d2fb9c2cf757c121...
Dataset parquet downloaded and prepared to /root/.cache/huggingface/datasets/parquet/davanstrien--embellishments-c2c1f142f272db02/0.0.0/1638526fd0e8d960534e2155dc54fdff8dce73851f21f031d2fb9c2cf757c121. Subsequent calls will reuse this data.

We now have a new column which contains the embeddings for our images. We could manually search through these and compare them to an input embedding, but datasets has an add_faiss_index method. This uses the faiss library to create an efficient index for searching embeddings. For more background on this library you can watch this YouTube video.
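To make it concrete what a flat (exact) index computes, here is a brute-force NumPy sketch of nearest-neighbour search by squared L2 distance. My understanding is that, with default arguments, datasets builds an exact L2 index (`faiss.IndexFlat`), but check the documentation for your version; faiss is also far faster than this on 400k vectors. `nearest_l2` is a hypothetical helper, and the toy 2-d vectors stand in for the 512-d CLIP embeddings.

```python
import numpy as np


def nearest_l2(embeddings, query, k=3):
    """Exact search: return (squared L2 distances, row indices) of the k rows
    of `embeddings` closest to `query`, nearest first."""
    # Squared Euclidean distance from the query to every stored vector
    dists = ((embeddings - query) ** 2).sum(axis=1)
    # argsort puts the smallest distances (closest vectors) first
    idx = np.argsort(dists)[:k]
    return dists[idx], idx


# Toy 2-d "embeddings" standing in for the 512-d CLIP vectors
embeddings = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [5.0, 5.0]], dtype=np.float32)
query = np.array([0.9, 0.1], dtype=np.float32)

scores, indices = nearest_l2(embeddings, query, k=2)
print(indices)  # [1 0]
print(scores)
```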

ds_with_embeddings['train'].add_faiss_index(column='embeddings')

Dataset({

features: ['fname', 'year', 'path', 'img', 'embeddings'],

num_rows: 416935

})

Image search

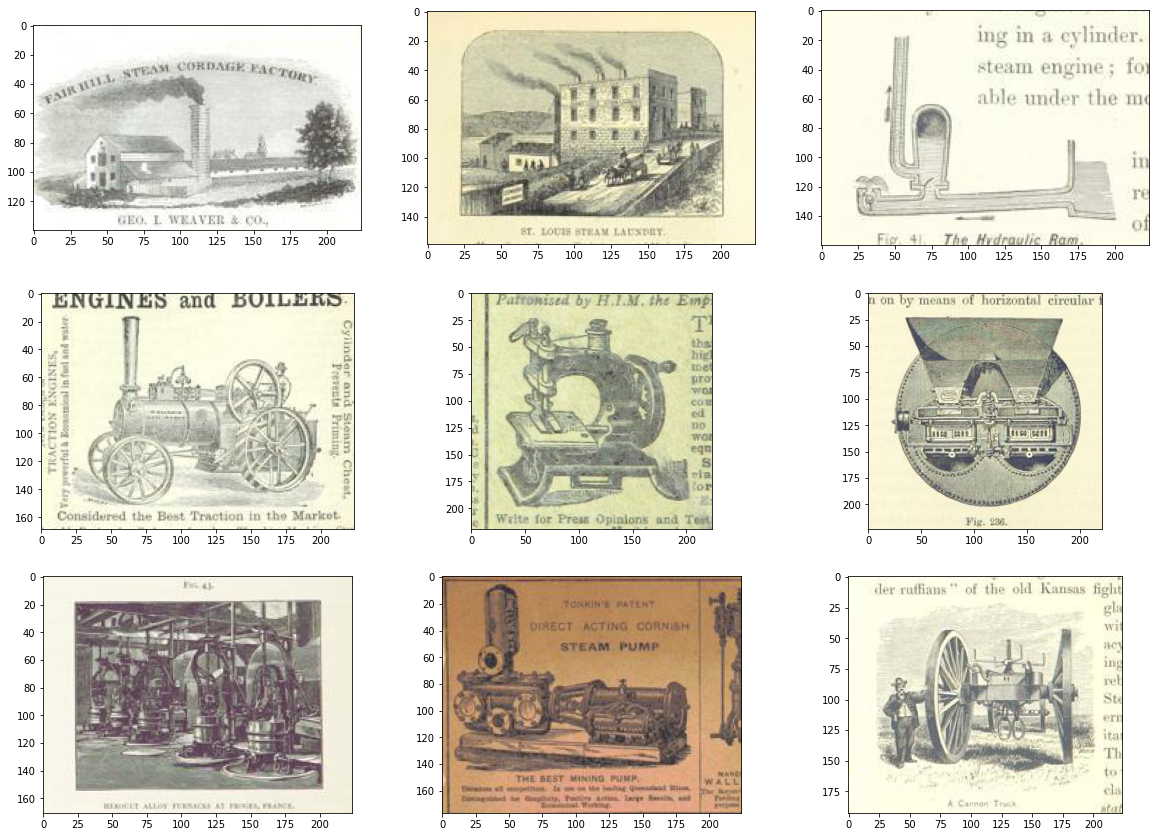

We now have everything we need to create a simple image search. We can use the same model we used to encode our images to encode some input text. This will act as the prompt for which we try to find close examples. Let’s start with ‘a steam engine’.
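One thing worth keeping in mind when searching: CLIP embeddings are commonly compared with cosine similarity, while an L2 index compares raw distances, and the embeddings returned here are not unit length (the prompt printed below has entries above 5). For unit-length vectors, ‖a − b‖² = 2 − 2·cos(a, b), so L2 and cosine give the same ranking once everything is normalised. The NumPy check below verifies that identity on random vectors; recent sentence-transformers versions also accept `normalize_embeddings=True` in `encode`, but treat that as an assumption to check against your installed version.

```python
import numpy as np

rng = np.random.default_rng(0)
embeddings = rng.normal(size=(100, 512))  # random stand-ins for image embeddings
query = rng.normal(size=512)              # random stand-in for a text embedding


def l2_normalize(x):
    # Divide each vector by its Euclidean norm so it has length 1
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


emb_n, q_n = l2_normalize(embeddings), l2_normalize(query)

cosine = emb_n @ q_n                      # cosine similarity per row
sq_l2 = ((emb_n - q_n) ** 2).sum(axis=1)  # squared L2 distance per row

# For unit vectors: ||a - b||^2 = 2 - 2 cos(a, b)
print(np.allclose(sq_l2, 2 - 2 * cosine))
# Highest cosine similarity == smallest L2 distance, so the rankings agree
print((np.argsort(-cosine) == np.argsort(sq_l2)).all())
```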

prompt = model.encode("A steam engine")

We can see what this looks like:

#collapse_output

prompt

array([-4.68399227e-02, -1.25237599e-01, 1.25164926e-01, 1.23583399e-01,

5.36684394e-02, -2.80560672e-01, 2.89631691e-02, -9.63450074e-01,

-1.52872965e-01, -3.83016393e-02, 9.01967064e-02, -5.84575422e-02,

1.04646191e-01, 2.44443744e-01, 1.38233244e-01, -3.97525132e-02,

4.35137331e-01, -4.26820181e-02, -8.48560631e-02, -6.94137365e-02,

6.25562131e-01, 3.68572891e-01, 3.34365219e-01, -3.37864846e-01,

-2.53632635e-01, -3.01467925e-01, -1.56484097e-01, 2.94869483e-01,

-1.89204350e-01, -1.13111593e-01, -1.46938376e-02, 2.97405511e-01,

-2.92487741e-01, 3.56931627e-01, 1.44009173e-01, 7.53008351e-02,

-1.02462962e-01, 2.26309776e-01, -3.77506733e-01, 4.75439876e-02,

-8.52131844e-03, 7.40285963e-03, -1.36876494e-01, 1.12041593e-01,

3.65501434e-01, -9.37360153e-02, 1.00782610e-01, -3.86462212e-01,

-1.39045209e-01, -2.31989667e-01, -2.62126565e-01, 8.75059143e-02,

-9.87479314e-02, 7.31039718e-02, -5.99793851e-01, -5.31058311e-01,

1.46116272e-01, 1.58094600e-01, -5.31955510e-02, 1.91384852e-01,

1.16943717e-01, -4.84316409e-01, -1.38332695e-01, 1.76510841e-01,

-2.17938051e-01, -1.00890748e-01, -4.45051998e-01, 2.71521568e-01,

-1.12926617e-01, -3.37198257e-01, -1.34169117e-01, -7.38745630e-02,

-1.23398125e-01, 3.62316787e-01, 9.09636840e-02, -3.20305794e-01,

5.82561374e-01, -3.51719618e-01, -1.05368085e-02, -3.90766770e-01,

-3.18382740e-01, 5.37567735e-02, -6.24650240e-01, 2.18755245e-01,

3.51645321e-01, -3.01214606e-02, -8.49011913e-02, -3.29971045e-01,

2.13861912e-01, -1.10820271e-02, -3.52595486e-02, -3.70746814e-02,

-1.35805202e+00, 3.35692495e-01, -2.83742435e-02, -1.39813796e-01,

3.66676860e-02, 2.62957454e-01, 2.52151459e-01, -6.14355244e-02,

2.01516539e-01, -4.14117992e-01, -2.58466527e-02, 1.06067717e-01,

3.14981639e-02, -1.45749748e-02, -5.94865866e-02, 2.55122900e-01,

-3.30369681e-01, 6.39781356e-04, 1.65513411e-01, 7.37893358e-02,

-4.69729975e-02, 3.36943477e-01, 4.38236594e-02, -4.21047479e-01,

-1.14590853e-01, 1.49240956e-01, 1.34405270e-01, 3.97198983e-02,

-1.20852023e-01, -7.22009778e-01, 1.17442548e-01, -7.35135227e-02,

5.45979321e-01, 1.76602621e-02, 6.59747049e-02, 8.00846070e-02,

3.87920737e-01, -3.57501693e-02, 1.19425125e-01, -2.89906412e-01,

-2.84183323e-02, 5.73142338e+00, 1.24172762e-01, -1.59575850e-01,

-5.33452034e-02, -1.77120879e-01, 2.14188576e-01, -3.49292234e-02,

-4.76958305e-02, -1.05941862e-01, -1.58911452e-01, 1.87136307e-02,

-2.16531213e-02, 1.37230158e-01, 4.62583750e-02, 2.19857365e-01,

3.41235586e-02, -3.29913348e-02, 9.88523886e-02, -1.30611554e-01,

-1.53349772e-01, 2.20886514e-01, 1.53534949e-01, -4.27889526e-01,

-4.12531018e-01, 2.70397663e-01, 1.88448757e-01, 4.66853082e-02,

2.63707846e-01, -9.56512764e-02, -3.26435685e-01, -1.24463499e-01,

4.49354291e-01, -4.17843968e-01, -5.27932420e-02, -1.28314078e-01,

-1.19249836e-01, -1.19294032e-01, 3.73742878e-01, 2.07954675e-01,

-1.41953439e-01, 3.89361024e-01, -1.99988037e-01, 3.62350583e-01,

-8.77851099e-02, -1.08132876e-01, -9.82177258e-03, 1.80039972e-01,

1.35815665e-02, 3.20201695e-01, -1.74580999e-02, -1.08204901e-01,

-2.29793668e-01, -2.09628209e-01, 4.13929313e-01, -1.73814282e-01,

-4.10574347e-01, -1.59104809e-01, -6.01581074e-02, 6.22577034e-02,

-3.67693931e-01, 1.85215116e-01, -2.03229636e-01, -8.92911255e-02,

-4.25831258e-01, -1.45366028e-01, 2.45514482e-01, -1.65927559e-01,

-2.54413635e-02, -2.91361034e-01, -8.33243579e-02, -4.79405448e-02,

6.35769814e-02, 8.04642588e-02, 5.31384498e-02, 2.50850171e-02,

-8.98692310e-02, 4.97757077e-01, 6.37893498e-01, -2.58815974e-01,

4.14507166e-02, 9.45882648e-02, -9.01474580e-02, -9.18833911e-02,

-2.48883665e-01, 9.16991904e-02, -2.93194801e-01, -1.49350330e-01,

7.20755905e-02, -9.76985693e-03, -4.70465049e-02, -2.78597653e-01,

-7.63949528e-02, -3.14843357e-01, 3.18657011e-01, -3.06758255e-01,

-2.06573829e-01, -2.20574200e-01, 1.81351285e-02, 2.57636189e-01,

2.39799708e-01, -2.31798366e-01, -8.34087562e-03, 6.13241374e-01,

-2.10393399e-01, 2.52263397e-01, 1.66839644e-01, -2.71174073e-01,

2.31348664e-01, 1.15150154e-01, 2.23357946e-01, 1.37287825e-01,

-8.56669843e-02, 3.43877286e-01, -1.09687179e-01, 3.24211985e-01,

-4.53893900e-01, -2.30711773e-01, -2.48840563e-02, 1.80964172e-01,

4.73472506e-01, 5.22104502e-01, 9.96741354e-02, 1.87694326e-01,

2.41730541e-01, -2.78556377e-01, 7.48419687e-02, 2.80560136e-01,

-1.25464931e-01, 1.51028201e-01, 1.39490321e-01, 5.16689643e-02,

5.30310348e-02, 1.61938250e-01, 3.72225225e-01, -4.49403644e-01,

1.19608052e-01, 2.43661910e-01, 9.89501849e-02, 2.74168640e-01,

4.84039634e-02, -1.19901955e-01, -1.57916725e-01, -2.20868304e-01,

1.03498720e-01, 3.99750322e-01, 1.03758566e-01, 8.08660090e-02,

1.68566346e-01, -3.42532575e-01, 2.51480471e-02, 1.23976640e-01,

-2.10433707e-01, 2.81242996e-01, 2.39082754e-01, 2.01786831e-02,

4.61297363e-01, 5.62884361e-02, 2.15039015e-01, -1.65275872e-01,

1.01690084e-01, -4.50959802e-03, -4.46137577e-01, 4.31368239e-02,

-4.51804757e-01, -2.26415813e-01, 1.31732523e-01, -2.00945437e-02,

1.77461311e-01, -1.64631978e-02, 4.40553159e-01, 1.41214132e-01,

3.42677176e-01, -2.23303795e-01, -2.10693538e-01, 1.94943929e-03,

-2.33348235e-01, 4.64889407e-03, 5.71020804e-02, 1.99669391e-01,

5.72273111e+00, -2.95036316e-01, -5.13455391e-01, 1.87334672e-01,

4.09545094e-01, -7.09135592e-01, 1.89325869e-01, -6.14660345e-02,

3.29098284e-01, 2.82059342e-01, 3.48631829e-01, -9.74263549e-02,

-4.83064592e-01, -1.35906041e-04, 3.44773471e-01, -3.56532484e-01,

5.36619090e-02, -1.85481656e+00, 3.87955368e-01, -1.83132842e-01,

-1.34021699e-01, -1.84214741e-01, 6.85371086e-02, 1.10808179e-01,

-6.64586425e-02, 6.85550272e-02, 1.81145087e-01, -2.15605676e-01,

-1.09192222e-01, -7.09795505e-02, 1.77813157e-01, -2.76037157e-01,

2.19184965e-01, -3.35977226e-01, 1.01434961e-01, 4.24576849e-02,

6.37579709e-04, -1.23296835e-01, -6.84914351e-01, 5.02923191e-01,

2.19384342e-01, 4.92008686e-01, -1.94621727e-01, -2.48740703e-01,

-1.32586688e-01, -1.77171156e-02, -4.71081585e-03, 1.58246011e-01,

-3.27363521e-01, -3.30681592e-01, -2.68038437e-02, -1.85811728e-01,

-1.84623767e-02, -3.22798610e-01, 3.07092518e-01, 1.06014945e-01,

3.20541680e-01, -2.55453944e-01, -2.30755419e-01, -1.19963072e-01,

-2.04865620e-01, 4.02828932e-01, -3.01321566e-01, 4.01021272e-01,

-3.02002877e-01, 1.42853945e-01, 2.94484437e-01, -2.06042349e-01,

-3.03069353e-01, -2.83185482e-01, -1.03388466e-02, -1.03018671e-01,

4.25990820e-02, -2.94244856e-01, 3.19168091e-01, 3.89839858e-02,

-1.95185751e-01, -9.88216847e-02, -4.01682496e-01, 4.60841119e-01,

1.40236557e-01, 1.49914265e-01, -4.25037295e-01, 2.63067722e-01,

1.31706342e-01, 3.21884871e-01, -2.39963964e-01, 4.01636630e-01,

-2.55293436e-02, -7.36447945e-02, -8.34826380e-03, 1.11923724e-01,

-2.71807779e-02, -3.35412771e-02, 2.33933121e-01, 3.33954431e-02,

3.56481314e-01, -8.09433609e-02, -1.82573602e-01, 1.75429478e-01,

-3.23554099e-01, 9.15928558e-03, 1.54344559e-01, 2.50909716e-01,

1.45193070e-01, 2.48686507e-01, -9.65276286e-02, -2.73654372e-01,

5.46456315e-02, 1.83476061e-02, -1.61773548e-01, -2.97708124e-01,

-1.74462572e-01, -1.14246726e-01, 2.32043359e-02, 1.98346555e-01,

2.31929243e-01, -9.74937603e-02, -2.26448864e-01, -6.31427839e-02,

2.23113708e-02, -3.72859359e-01, 2.47197479e-01, -3.65516663e-01,

-3.24409932e-01, 1.83964625e-01, -3.17104161e-03, -2.66632497e-01,

-1.86478943e-01, 1.11006252e-01, -3.93829793e-02, -3.11926544e-01,

2.88751245e-01, 2.66543150e-01, -1.74334750e-01, -4.89967108e-01,

3.38638097e-01, 2.47487854e-02, -3.66539627e-01, 5.78703731e-03,

1.11349493e-01, -2.60909855e-01, -4.34429348e-02, -4.47440267e-01,

2.80311018e-01, -6.46181554e-02, -2.93976814e-02, -3.02857161e-01,

2.10391358e-03, -3.70345414e-02, 7.15476647e-02, 4.39802915e-01,

2.11817563e-01, 6.87709302e-02, 5.68117499e-01, -2.40518659e-01,

2.59056687e-01, -1.32284269e-01, 1.28509507e-01, -1.94875181e-01,

-2.68568173e-02, -7.85035193e-02, -2.49556839e-01, 1.44016743e-01,

-2.98127495e-02, -1.41643599e-01, 1.77106410e-02, 1.83453292e-01,

-1.39113069e-02, -1.97993904e-01, 3.07995021e-01, 3.31339300e-01,

2.07652867e-01, 1.27762616e-01, 2.26422980e-01, 1.94940835e-01,

-4.90801185e-02, -5.35061479e-01, -2.99495637e-01, 3.68627608e-02,

-4.15636569e-01, 6.44698918e-01, -4.50457260e-02, 7.05935210e-02,

-1.11036956e-01, -1.42384216e-01, -7.05560222e-02, 2.86495592e-03,

3.45641613e-01, -5.66974521e-01, -1.34682715e-01, -2.59017110e-01,

3.27597320e-01, 1.04890786e-01, -3.11988890e-01, -2.32627541e-01,

3.14653963e-02, 2.76591361e-01, 1.66302443e-01, -2.39517853e-01],

dtype=float32)

We can use another method from the datasets library, get_nearest_examples, to retrieve images whose embeddings are close to our prompt embedding. The k argument sets the number of results we want to get back.
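As a rough illustration of what this lookup does, here is a brute-force sketch in NumPy. It assumes the faiss index was added with default settings, which (as far as I know) gives a flat, exact index using L2 distance. In that case the returned scores are squared L2 distances, so lower means closer. The helper name nearest_examples and the toy data are hypothetical and not part of datasets.

```python
import numpy as np


def nearest_examples(embeddings: np.ndarray, query: np.ndarray, k: int = 9):
    """Brute-force k-nearest-neighbour search by squared L2 distance.

    Hypothetical helper: a stand-in for an exact (flat) faiss L2 index,
    which is assumed to be what add_faiss_index builds by default.
    """
    # Squared Euclidean distance from the query to every stored embedding
    diffs = embeddings - query
    distances = np.einsum("ij,ij->i", diffs, diffs)
    # Smallest distance first: the best match comes out on top
    k = min(k, len(embeddings))
    indices = np.argsort(distances)[:k]
    return distances[indices], indices


# Toy data: 100 random 512-d "image embeddings" and a query very close to row 42
rng = np.random.default_rng(0)
image_embeddings = rng.normal(size=(100, 512)).astype(np.float32)
query = image_embeddings[42] + 0.01 * rng.normal(size=512).astype(np.float32)

scores, indices = nearest_examples(image_embeddings, query, k=9)
print(indices[0])  # 42: the row the query was derived from
```

A real faiss index performs the same exhaustive comparison with heavily optimised code (and approximate index types trade some accuracy for speed). If the embeddings were L2-normalised first, ranking by this distance would match ranking by cosine similarity, since for unit vectors ‖a − b‖² = 2 − 2·cos(a, b).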

scores, retrieved_examples = ds_with_embeddings['train'].get_nearest_examples('embeddings', prompt, k=9)

We can index into the first example this retrieves:

retrieved_examples['img'][0]

This isn’t quite a steam engine, but it’s not a completely weird result either. We can plot the other results to see what else was returned.

import matplotlib.pyplot as plt

plt.figure(figsize=(20, 20))
columns = 3
rows = (9 + columns - 1) // columns  # subplot needs an integer row count; this is a ceiling division
for i in range(9):
    image = retrieved_examples['img'][i]
    plt.subplot(rows, columns, i + 1)
    plt.imshow(image)

Some of these results look fairly close to our input prompt. We can wrap this in a function so we can more easily play around with different prompts:

def get_image_from_text(text_prompt, number_to_retrieve=9):

prompt = model.encode(text_prompt)

scores, retrieved_examples = ds_with_embeddings['train'].get_nearest_examples('embeddings', prompt,k=number_to_retrieve)

plt.figure(figsize=(20, 20))

columns = 3

for i in range(9):

image = retrieved_examples['img'][i]

plt.title(text_prompt)

plt.subplot(9 / columns + 1, columns, i + 1)

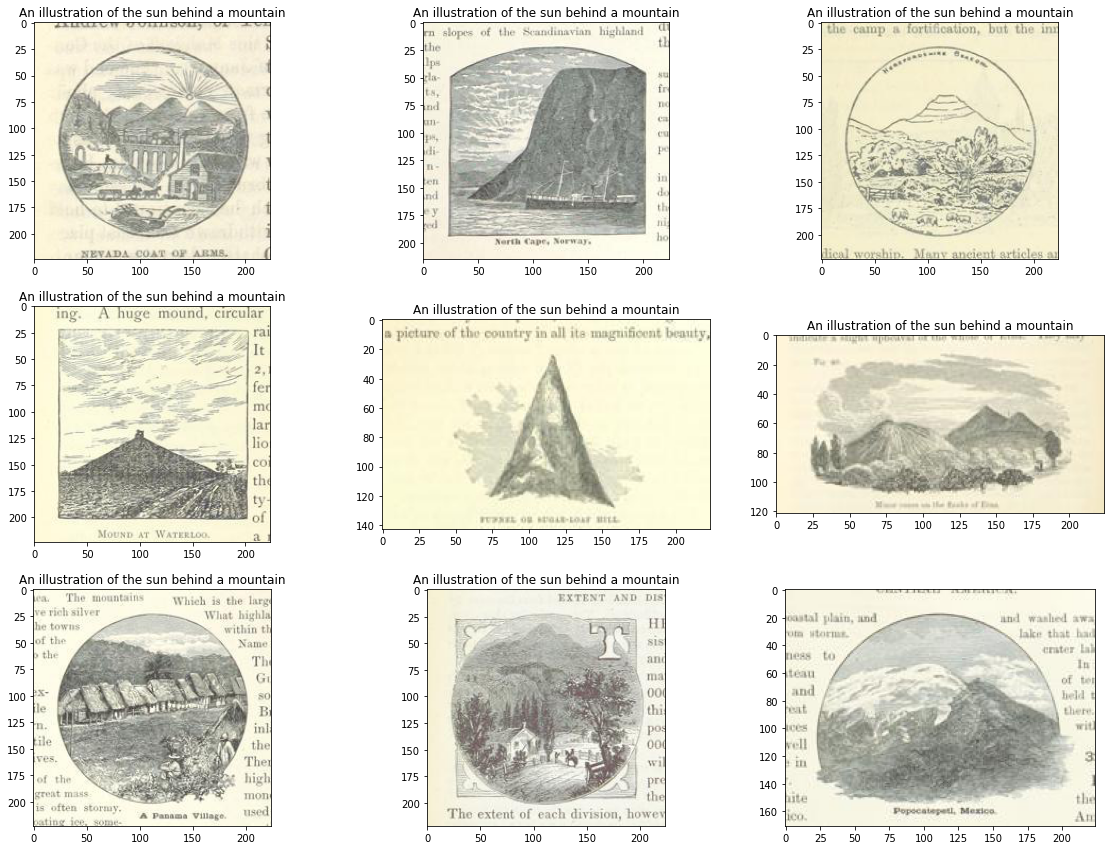

plt.imshow(image)get_image_from_text("An illustration of the sun behind a mountain")

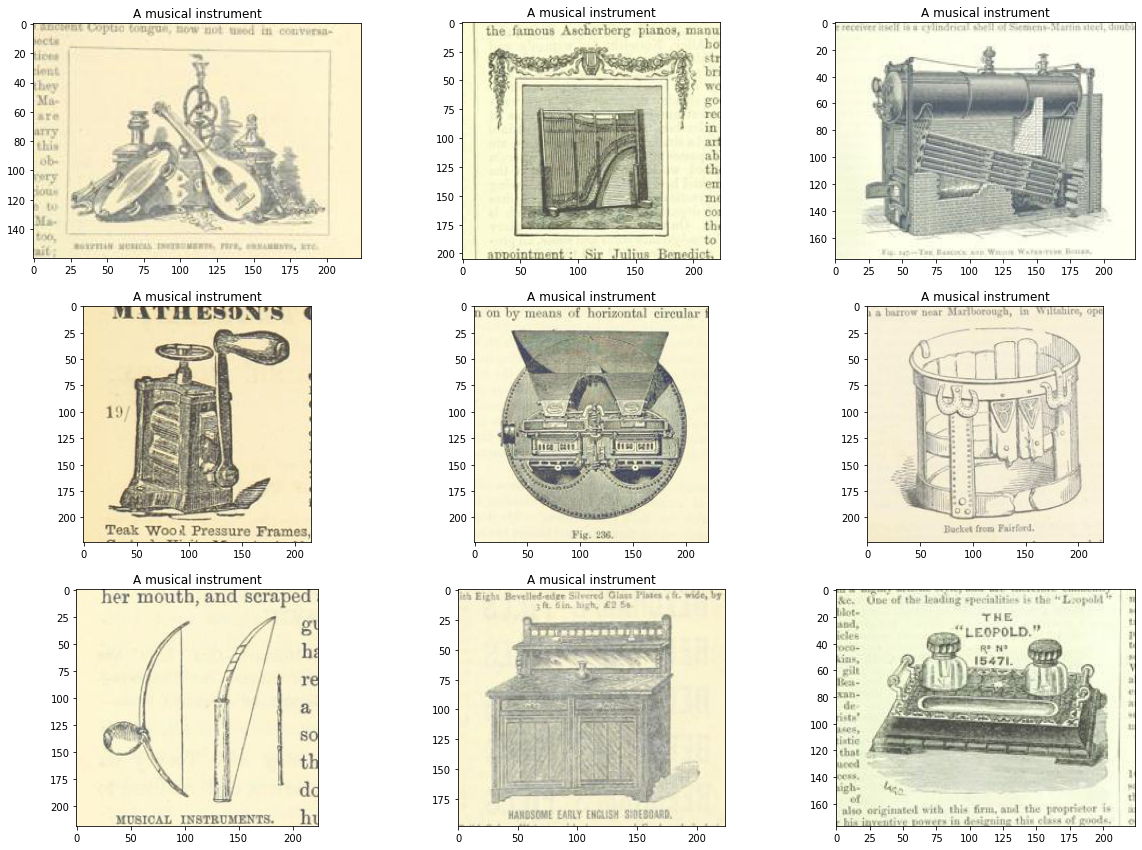

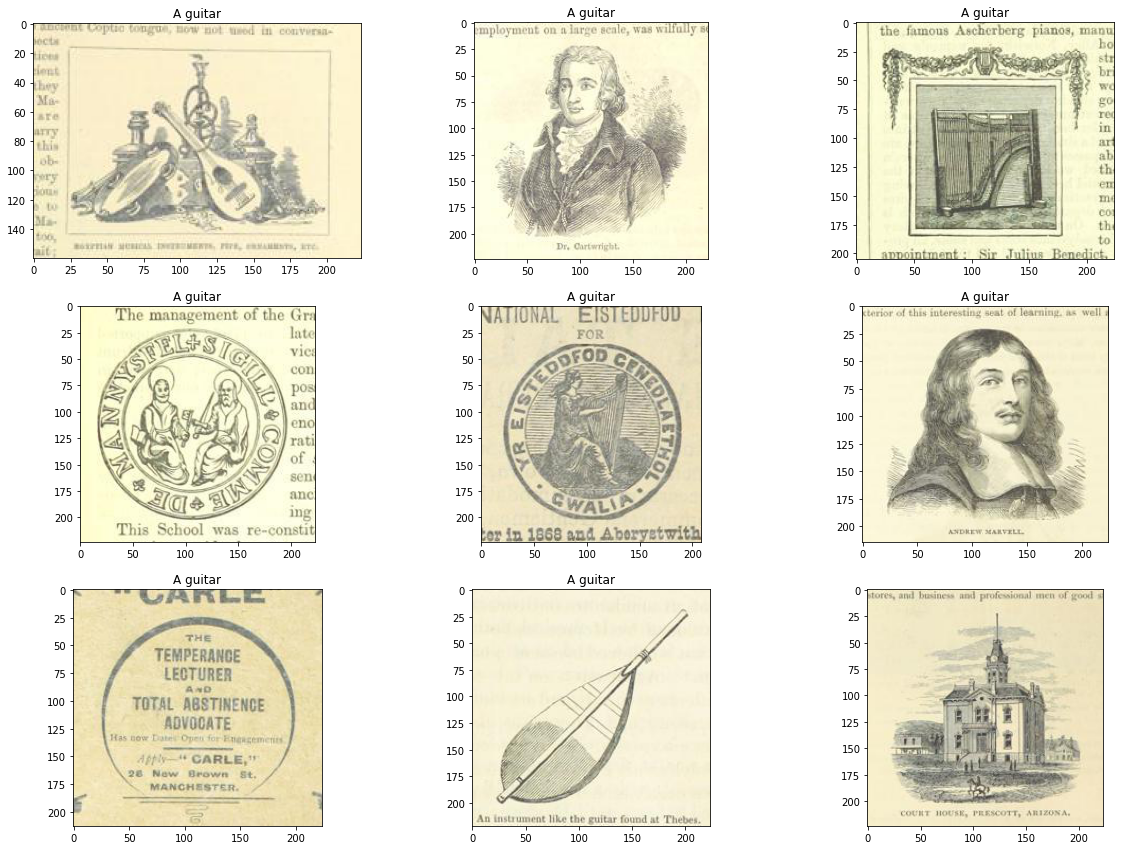

Trying a bunch of prompts ✨

Now that we have a function for getting a few results, we can try a bunch of different prompts:

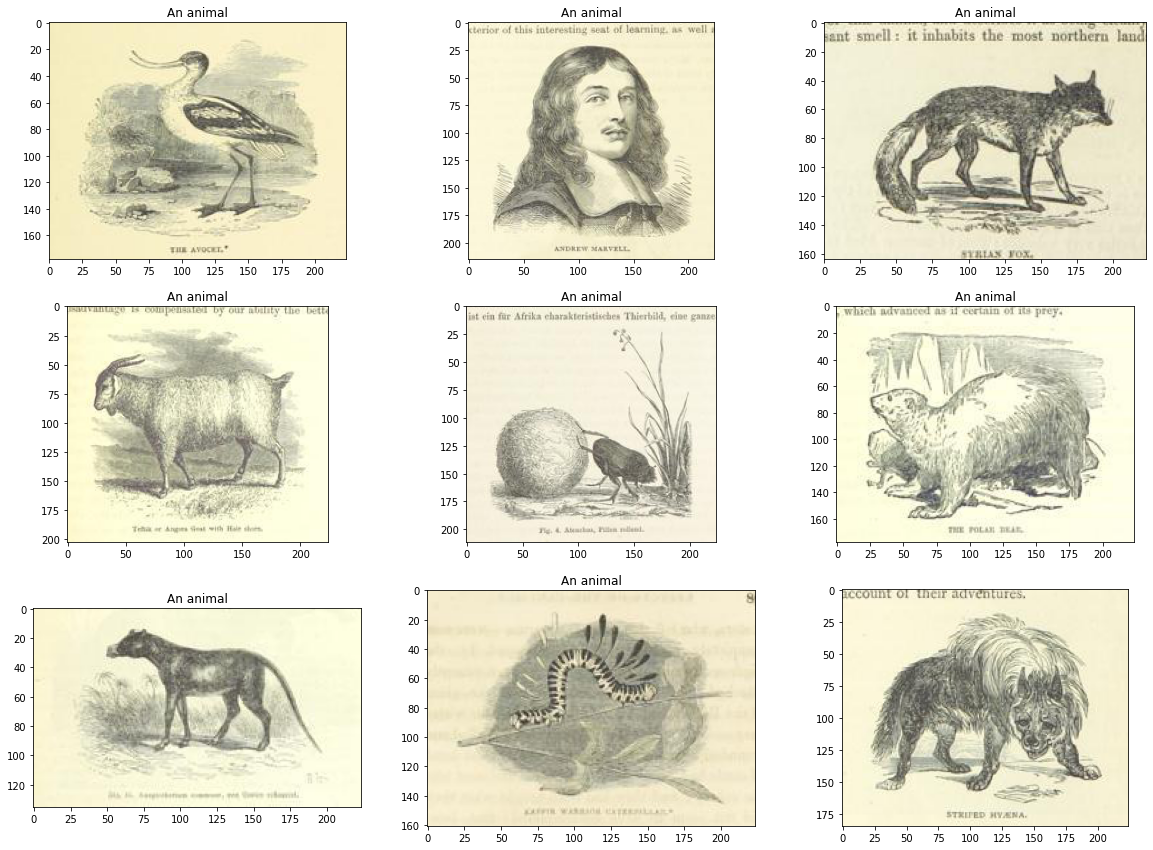

For some of these I’ll choose prompts which are a broad ‘category’, e.g. ‘a musical instrument’ or ‘an animal’; others are specific, e.g. ‘a guitar’.

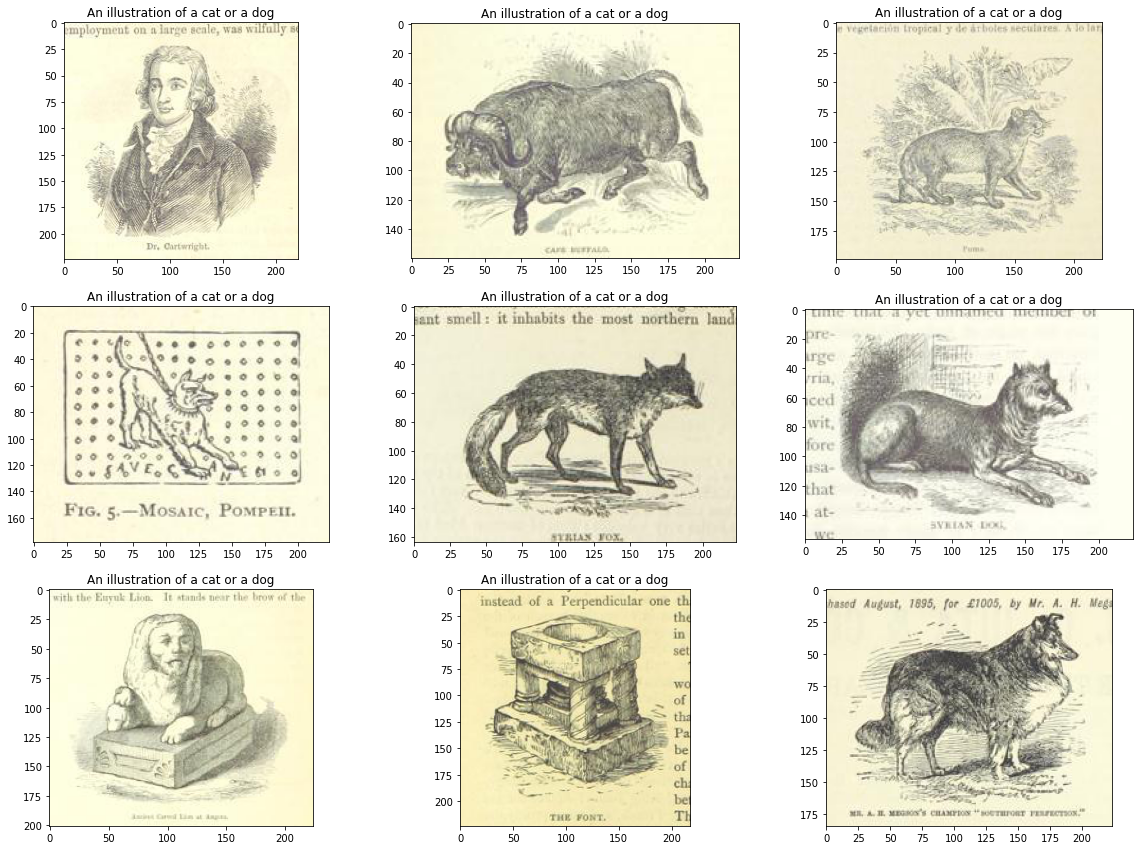

Out of interest, I also tried a boolean operator: “An illustration of a cat or a dog”.

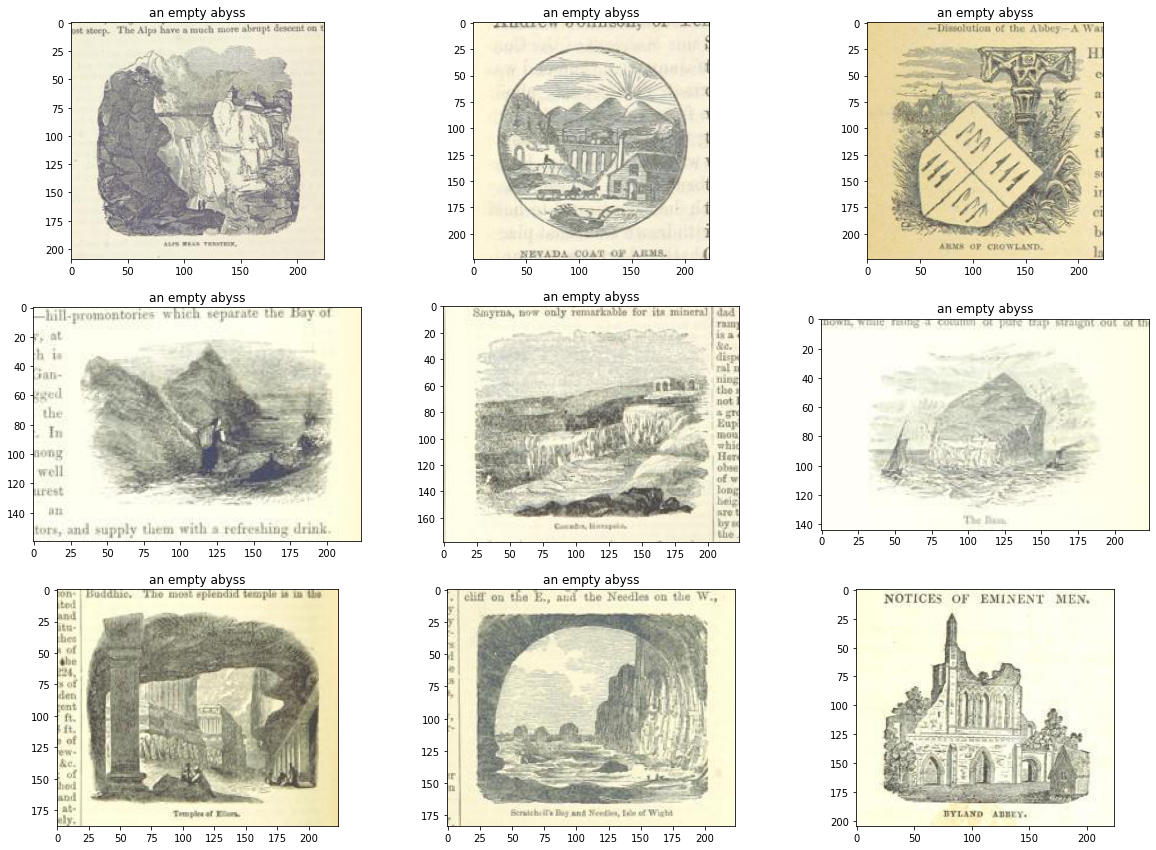

Finally, I tried something a little more abstract: “an empty abyss”.

prompts = ["A musical instrument", "A guitar", "An animal", "An illustration of a cat or a dog", "an empty abyss"]

for prompt in prompts:
    get_image_from_text(prompt)

These results aren’t always right, but there are usually some reasonable results in there. It already seems like this could be useful for searching for the semantic content of images in this dataset. However, we might hold off on sharing this as is…

Creating a huggingface space? 🤷🏼

One obvious next step for this kind of project is to create a Hugging Face Spaces demo. This is what I’ve done for other models.

It was a fairly simple process to get a Gradio app set up from where we’ve got to here. Here is a screenshot of this app.

However, I’m a little bit wary about making this public straightaway. Looking at the model card for the CLIP model, we can see its primary intended uses:

Primary intended uses

We primarily imagine the model will be used by researchers to better understand robustness, generalization, and other capabilities, biases, and constraints of computer vision models. source

This is fairly close to what we are interested in here. In particular, we might be interested in how well the model deals with the kinds of images in our dataset (illustrations mostly from 19th-century books). These images are (probably) fairly different from CLIP’s training data. The fact that some of the images also contain text might help, since CLIP displays some OCR ability.

However, looking at the out-of-scope use cases in the model card:

Out-of-Scope Use Cases

Any deployed use case of the model - whether commercial or not - is currently out of scope. Non-deployed use cases such as image search in a constrained environment, are also not recommended unless there is thorough in-domain testing of the model with a specific, fixed class taxonomy. This is because our safety assessment demonstrated a high need for task specific testing especially given the variability of CLIP’s performance with different class taxonomies. This makes untested and unconstrained deployment of the model in any use case currently potentially harmful. source

suggests that ‘deployment’ is not a good idea. Whilst the results I got are interesting, I haven’t played around with the model enough yet (and haven’t done anything more systematic to evaluate its performance and biases). An additional consideration is the target dataset itself. The images are drawn from books covering a variety of subjects and time periods. There are plenty of books which reflect colonial attitudes, and as a result some of the included images may depict certain groups of people in a negative way. This could be a bad combo with a tool which allows any arbitrary text to be encoded as a prompt.

There may be ways around this issue, but this will require a bit more thought.
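The model card’s call for “thorough in-domain testing of the model with a specific, fixed class taxonomy” hints at one possible direction. Below is a minimal sketch of what that might look like, assuming a small set of retrieved images has been labelled by hand. Searches are restricted to a fixed list of categories with pre-written prompts (rather than arbitrary text), and each category gets a precision@k score. The TAXONOMY entries and all function names are hypothetical; this is a sketch under those assumptions, not a tested evaluation.

```python
from typing import Dict, List, Sequence

# Hypothetical fixed class taxonomy: only these categories can be searched for,
# each mapped to a pre-written prompt instead of arbitrary user text.
TAXONOMY: Dict[str, str] = {
    "musical_instrument": "An illustration of a musical instrument",
    "guitar": "An illustration of a guitar",
    "animal": "An illustration of an animal",
    "steam_engine": "An illustration of a steam engine",
}


def prompt_for(category: str) -> str:
    """Return the vetted prompt for a category, refusing anything outside the taxonomy."""
    try:
        return TAXONOMY[category]
    except KeyError:
        raise ValueError(f"Unknown category {category!r}; allowed: {sorted(TAXONOMY)}") from None


def precision_at_k(retrieved_labels: Sequence[str], category: str, k: int) -> float:
    """Fraction of the top-k slots filled by images hand-labelled with the query category."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = list(retrieved_labels)[:k]
    return sum(label == category for label in top_k) / k


def evaluate(results: Dict[str, List[str]], k: int = 9) -> Dict[str, float]:
    """Per-category precision@k, given hand labels for the images retrieved for each category."""
    scores = {}
    for category, labels in results.items():
        prompt_for(category)  # raises ValueError for categories outside the taxonomy
        scores[category] = precision_at_k(labels, category, k)
    return scores
```

In practice the labels passed to evaluate would come from someone annotating the images that get_nearest_examples returns for each prompt_for(category), so the scores are only as good as that annotation.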

Conclusion

Although I don’t have a nice demo to show for it, I did get to work out a few more details of how datasets handles images. I’ve already used it to train some classification models, and everything seems to be working smoothly. The ability to push images around on the Hub will also be super useful for many use cases.

I plan to spend a bit more time thinking about whether there is a better way of sharing a CLIP-powered image search for the BL book images…

{{ "If you aren’t familiar with datasets, a feature represents the datatype of the different data you can have inside a dataset. For example, you may have int32, timestamps and strings. You can read more about how features work in the docs" | fndetail: 1 }}