%pip install openai langfuse --quiet

Note: you may need to restart the kernel to use updated packages.

Daniel van Strien

April 5, 2024

LANGFUSE_SECRET_KEY="sk-lf-..."

LANGFUSE_PUBLIC_KEY="pk-lf-..."

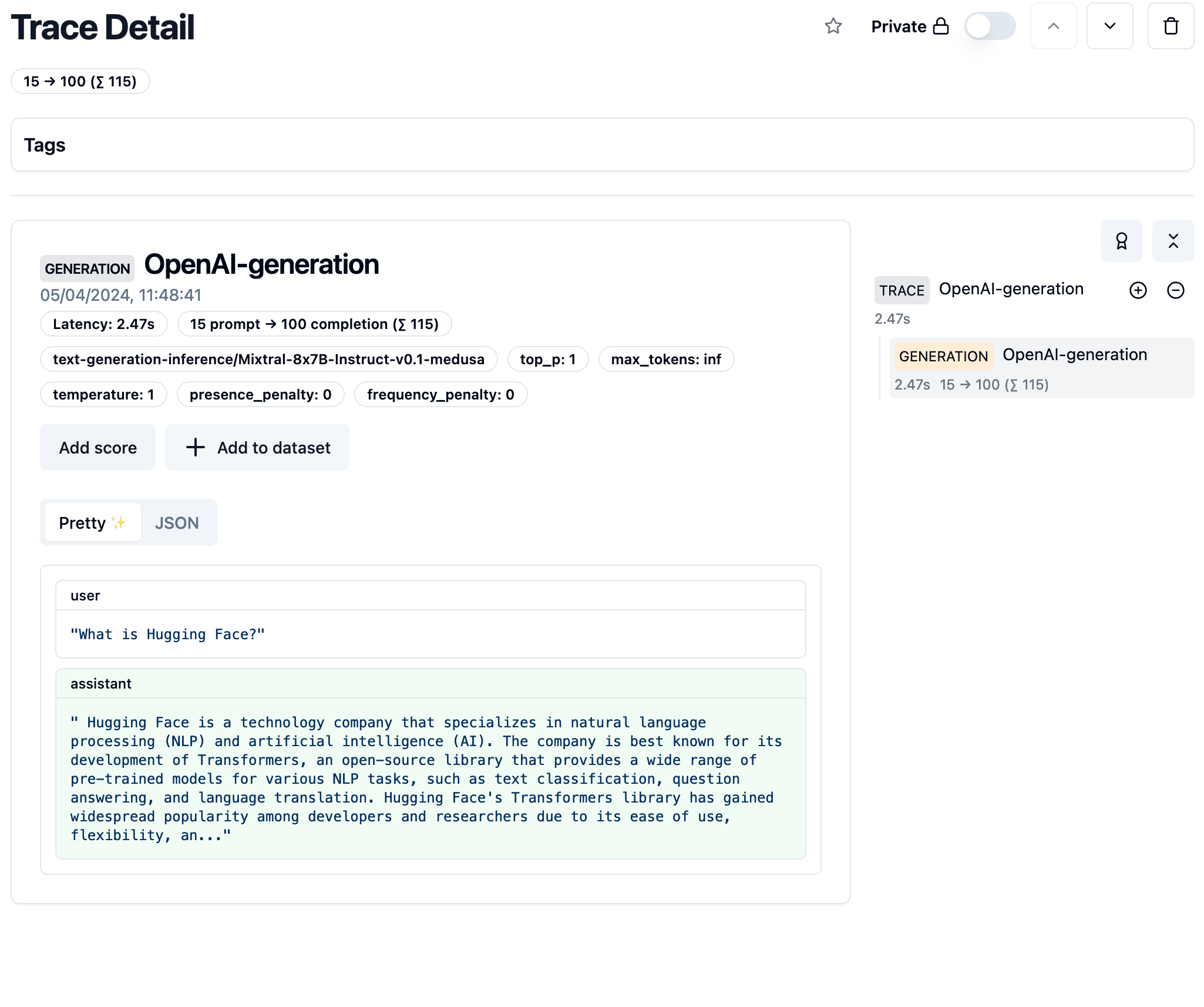

LANGFUSE_HOST="https://cloud.langfuse.com" # 🇪🇺 EU region

ChatCompletion(id='', choices=[Choice(finish_reason='length', index=0, logprobs=None, message=ChatCompletionMessage(content=" Hugging Face is a technology company that specializes in natural language processing (NLP) and artificial intelligence (AI). The company is best known for its development of Transformers, an open-source library that provides a wide range of pre-trained models for various NLP tasks, such as text classification, question answering, and language translation.\n\nHugging Face's Transformers library has gained widespread popularity among developers and researchers due to its ease of use, flexibility, and", role='assistant', function_call=None, tool_calls=None))], created=1712314124, model='text-generation-inference/Mixtral-8x7B-Instruct-v0.1-medusa', object='text_completion', system_fingerprint='1.4.3-sha-e6bb3ff', usage=CompletionUsage(completion_tokens=100, prompt_tokens=15, total_tokens=115))
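The response above ends mid-sentence and reports `finish_reason='length'`, which in the OpenAI-style response format means generation stopped at the token limit and not at a natural end. The request itself is not shown here, so the exact limit is an assumption, but `completion_tokens=100` is consistent with a cap of 100 tokens. A minimal sketch of how this could be checked in code follows. The helper name `summarize_completion` is hypothetical, and the `SimpleNamespace` object is only a stand-in that mirrors the fields printed above (with shortened text) so the snippet runs without a live endpoint.

```python
from types import SimpleNamespace


def summarize_completion(response):
    """Collect the first choice's text, finish reason, and token usage.

    Hypothetical helper: works on any object exposing the same attributes
    as the ChatCompletion printed above.
    """
    choice = response.choices[0]
    return {
        "text": choice.message.content,
        "finish_reason": choice.finish_reason,
        # 'length' signals that the output was cut off at the token limit
        "truncated": choice.finish_reason == "length",
        "total_tokens": response.usage.total_tokens,
    }


# Stand-in for the real response object; the content is shortened placeholder text
example = SimpleNamespace(
    choices=[
        SimpleNamespace(
            finish_reason="length",
            message=SimpleNamespace(content=" Hugging Face is a technology company"),
        )
    ],
    usage=SimpleNamespace(completion_tokens=100, prompt_tokens=15, total_tokens=115),
)

summary = summarize_completion(example)
print(summary["finish_reason"], summary["truncated"], summary["total_tokens"])
```

If `truncated` is true, the usual options are to raise the token limit on the request or to treat the text as a partial answer.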