import torchSearching for machine learning models using semantic search

The Hugging Face model hub has (at the time of the last checking) 60,509 models publicly available. Some of these models are useful as base models for further fine-tuning; these include your classics like bert-base-uncased.

The hub also has more obscure indie hits that might already do a good job on your desired downstream task or be a closer start. For example, if one wanted to classify the genre of 18th Century books, it might make sense to start with a model for classifying 19th Century books.

Finding candidate models

Ideally, we’d like a quick way to identify if a model might already do close to what we want. From there, we would likely want to review a bunch of other info about the model before deciding if it might be helpful for us or not.

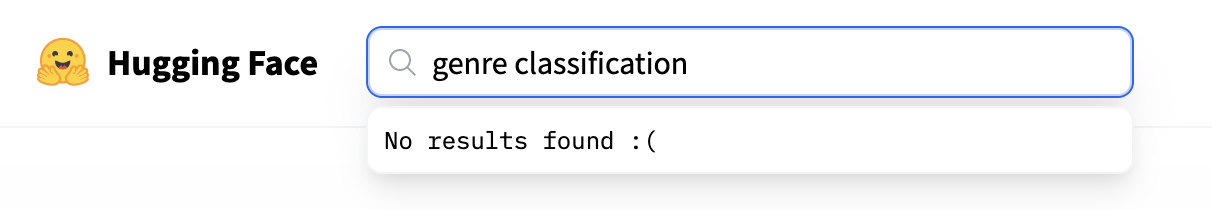

Unfortunately, finding suitable models on the hub isn’t always that easy. Even knowing that models for genre classification exist on the hub, we don’t find any results.

It’s not documented exactly how the search on the hub works, but it seems to be based mainly on the model’s name rather than the README or other information. In this blog post, I will continue some previous experiments with embeddings to see if there might be different ways in which we could identify potential models.

This will be a very rough experiment and is more about establishing whether this is an avenue worth exploring rather than a fully fleshed-out approach.

First install some libraries we’ll use:

deps = ["datasets" ,"sentence-transformers", "rich['jupyter']", "requests"]

if torch.cuda.is_available():

deps.append("faiss-gpu")

else:

deps.append("faise-cpu")%%capture

!pip install {" ".join(deps)} --upgrade!git config --global credential.helper storeThese days I almost always have the rich extension loaded!

%load_ext rich

Using the huggingface_hub API to download some model metadata

Our goal is to see if we might be able to find suitable models more efficiently using some form of semantic search (i.e. using embeddings). To do this, we should grab some model data from the hub. The easiest way to do this is using the hub API.

from huggingface_hub import hf_api
import re
from rich import print

api = hf_api.HfApi()
api

<huggingface_hub.hf_api.HfApi object at 0x7f63832ff810>

Let’s take a look at some example models:

all_models = api.list_models()
all_models[:3]

[ ModelInfo: { modelId: hfl/chinese-macbert-base sha: None lastModified: None tags: [] pipeline_tag: fill-mask siblings: None private: False author: None config: None id: hfl/chinese-macbert-base }, ModelInfo: { modelId: bert-base-uncased sha: None lastModified: None tags: [] pipeline_tag: fill-mask siblings: None private: False author: None config: None id: bert-base-uncased }, ModelInfo: { modelId: microsoft/deberta-base sha: None lastModified: None tags: [] pipeline_tag: None siblings: None private: False author: None config: None id: microsoft/deberta-base } ]

For a particular model, we can also list the files in its repository:

files = api.list_repo_files(all_models[0].modelId)
files

[ '.gitattributes', 'README.md', 'added_tokens.json', 'config.json', 'flax_model.msgpack', 'pytorch_model.bin', 'special_tokens_map.json', 'tf_model.h5', 'tokenizer.json', 'tokenizer_config.json', 'vocab.txt' ]

Filtering

To limit the scope of this blog post, we’ll focus only on PyTorch models and ‘text classification’ models. The metadata about a model’s library (PyTorch) is probably fairly reliable. The task metadata, on the other hand, is not always reliable in my experience. This means our dataset probably includes some models that aren’t text classification models and misses some that are. For now, we won’t worry too much about this.

from huggingface_hub import ModelSearchArguments, ModelFilter

model_args = ModelSearchArguments()

model_filter = ModelFilter(
    task=model_args.pipeline_tag.TextClassification,
    library=model_args.library.PyTorch,
)

api.list_models(filter=model_filter)[0]

ModelInfo: { modelId: distilbert-base-uncased-finetuned-sst-2-english sha: 00c3f1ef306e837efb641eaca05d24d161d9513c lastModified: 2022-07-22T08:00:55.000Z tags: ['pytorch', 'tf', 'rust', 'distilbert', 'text-classification', 'en', 'dataset:sst2', 'dataset:glue', 'transformers', 'license:apache-2.0', 'model-index'] pipeline_tag: text-classification siblings: [RepoFile(rfilename='.gitattributes'), RepoFile(rfilename='README.md'), RepoFile(rfilename='config.json'), RepoFile(rfilename='map.jpeg'), RepoFile(rfilename='pytorch_model.bin'), RepoFile(rfilename='rust_model.ot'), RepoFile(rfilename='tf_model.h5'), RepoFile(rfilename='tokenizer_config.json'), RepoFile(rfilename='vocab.txt')] private: False author: None config: None id: distilbert-base-uncased-finetuned-sst-2-english downloads: 5185721 likes: 76 library_name: transformers }
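The filtering itself happens on the hub. To make concrete the kind of selection we are asking for, here is a rough offline sketch that keeps only models whose tags include both pytorch and text-classification (tags like these appear in the ModelInfo output above). ModelRecord is a hypothetical stand-in for ModelInfo, the records are toy data, and treating ModelFilter as a plain tag match is an assumption, not a description of how the hub implements it.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class ModelRecord:
    """Hypothetical stand-in for huggingface_hub's ModelInfo, keeping only the fields used here."""
    modelId: str
    tags: List[str] = field(default_factory=list)


def matches_filter(model: ModelRecord,
                   required_tags: Sequence[str] = ("pytorch", "text-classification")) -> bool:
    """Return True if every required tag appears in the model's tags."""
    return all(tag in model.tags for tag in required_tags)


# Toy records; the last model name is made up for illustration
models = [
    ModelRecord("distilbert-base-uncased-finetuned-sst-2-english",
                ["pytorch", "tf", "distilbert", "text-classification"]),
    ModelRecord("bert-base-uncased", ["pytorch", "tf", "bert", "fill-mask"]),
    ModelRecord("example-org/tf-only-classifier", ["tf", "text-classification"]),
]

selected = [m.modelId for m in models if matches_filter(m)]
print(selected)  # ['distilbert-base-uncased-finetuned-sst-2-english']
```

Because the check only looks at tags, a model whose task tag is missing or wrong would be silently skipped, which is one way the unreliable task metadata mentioned above shows up.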

Now that we have a filter, we’ll use it to grab all the models that match it.

all_models = api.list_models(filter=model_filter)
all_models[0]

ModelInfo: { modelId: distilbert-base-uncased-finetuned-sst-2-english sha: 00c3f1ef306e837efb641eaca05d24d161d9513c lastModified: 2022-07-22T08:00:55.000Z tags: ['pytorch', 'tf', 'rust', 'distilbert', 'text-classification', 'en', 'dataset:sst2', 'dataset:glue', 'transformers', 'license:apache-2.0', 'model-index'] pipeline_tag: text-classification siblings: [RepoFile(rfilename='.gitattributes'), RepoFile(rfilename='README.md'), RepoFile(rfilename='config.json'), RepoFile(rfilename='map.jpeg'), RepoFile(rfilename='pytorch_model.bin'), RepoFile(rfilename='rust_model.ot'), RepoFile(rfilename='tf_model.h5'), RepoFile(rfilename='tokenizer_config.json'), RepoFile(rfilename='vocab.txt')] private: False author: None config: None id: distilbert-base-uncased-finetuned-sst-2-english downloads: 5185721 likes: 76 library_name: transformers }

Let’s see how many models that gives us.

len(all_models)

6860

Later in this post, we’ll want to work with the config.json files (more on why later!), so let’s quickly check that all our models have one.

def has_config(model):
    # Note: this is a substring check, so files such as tokenizer_config.json also match
    for file in model.siblings:
        if "config.json" in file.rfilename:
            return True
    return False

has_config(all_models[0])

True

has_config = [model for model in all_models if has_config(model)]  # note: rebinds has_config from the function to a list

Let’s check how many we have now:

len(has_config)

6858
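One caveat about has_config: it checks whether "config.json" appears anywhere in a file name, so a repo that only contains tokenizer_config.json also passes, and the count above may include a few repos without a model config. A minimal sketch contrasting that substring test with an exact match on a toy file listing (both helper names are hypothetical):

```python
from typing import Iterable


def has_file_substring(filenames: Iterable[str], name: str = "config.json") -> bool:
    """Substring test, like has_config: tokenizer_config.json also counts as a match."""
    return any(name in filename for filename in filenames)


def has_file_exact(filenames: Iterable[str], name: str = "config.json") -> bool:
    """Exact test: only a file called exactly `name` (at the repo root) counts."""
    return any(filename == name for filename in filenames)


# Toy repo listing with a tokenizer config but no model config.json
files = [".gitattributes", "README.md", "tokenizer_config.json", "vocab.txt"]
print(has_file_substring(files))  # True
print(has_file_exact(files))      # False
```

Such repos aren’t a big problem in this post, since get_model_labels returns None for any model whose config.json can’t be read.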

We can also download a particular file from the hub:

from huggingface_hub import hf_hub_download

file = hf_hub_download(repo_id=all_models[0].modelId, filename="config.json")
file

'/root/.cache/huggingface/hub/models--distilbert-base-uncased-finetuned-sst-2-english/snapshots/00c3f1ef306e837efb641eaca05d24d161d9513c/config.json'

import json

with open(file) as f:
    data = json.load(f)
data

{ 'activation': 'gelu', 'architectures': ['DistilBertForSequenceClassification'], 'attention_dropout': 0.1, 'dim': 768, 'dropout': 0.1, 'finetuning_task': 'sst-2', 'hidden_dim': 3072, 'id2label': {'0': 'NEGATIVE', '1': 'POSITIVE'}, 'initializer_range': 0.02, 'label2id': {'NEGATIVE': 0, 'POSITIVE': 1}, 'max_position_embeddings': 512, 'model_type': 'distilbert', 'n_heads': 12, 'n_layers': 6, 'output_past': True, 'pad_token_id': 0, 'qa_dropout': 0.1, 'seq_classif_dropout': 0.2, 'sinusoidal_pos_embds': False, 'tie_weights_': True, 'vocab_size': 30522 }

We can also check whether the model has a README.md:

def has_file_in_repo(model, file_name):
    # Substring check against every file path in the repo
    for file in model.siblings:
        if file_name in file.rfilename:
            return True
    return False

has_file_in_repo(has_config[0], 'README.md')

True

has_readme = [model for model in has_config if has_file_in_repo(model, "README.md")]

We can see that there are more configs than READMEs:

len(has_readme)

3482

len(has_config)

6858

We now write some functions to grab both the README.md and config.json files from the hub.

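import concurrent.futures
from functools import lru_cache

import requests
from huggingface_hub import hf_hub_url
from requests.exceptions import JSONDecodeError

@lru_cache(maxsize=None)
def get_model_labels(model):
    """Return (modelId, labels) from the model's config.json, or (modelId, None) if unavailable."""
    try:
        url = hf_hub_url(repo_id=model.modelId, filename="config.json")
        return model.modelId, list(requests.get(url).json()['label2id'].keys())
    except (KeyError, JSONDecodeError, AttributeError):
        # No label2id, a null label2id, or a response that isn't JSON (e.g. a missing file)
        return model.modelId, None

get_model_labels(has_config[0])

('distilbert-base-uncased-finetuned-sst-2-english', ['NEGATIVE', 'POSITIVE'])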
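get_model_labels takes the keys of label2id in whatever order they appear in the JSON. If label order matters, one option is to sort labels by class id, preferring id2label (both fields appear in the config.json shown earlier). A sketch operating on an already-parsed config dict; labels_from_config is a hypothetical helper, not part of huggingface_hub:

```python
import json
from typing import List, Optional


def labels_from_config(config: dict) -> Optional[List[str]]:
    """Return label names ordered by class id, or None if the config has no label mapping."""
    id2label = config.get("id2label")
    if isinstance(id2label, dict) and id2label:
        # JSON object keys are strings, so sort them numerically rather than as text
        return [id2label[key] for key in sorted(id2label, key=int)]
    label2id = config.get("label2id")
    if isinstance(label2id, dict) and label2id:
        return sorted(label2id, key=label2id.get)
    return None


config = json.loads('{"id2label": {"0": "NEGATIVE", "1": "POSITIVE"}, '
                    '"label2id": {"NEGATIVE": 0, "POSITIVE": 1}}')
print(labels_from_config(config))        # ['NEGATIVE', 'POSITIVE']
print(labels_from_config({"dim": 768}))  # None
```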

def get_model_readme(model):
    url = hf_hub_url(repo_id=model.modelId, filename="README.md")
    # If the repo has no README.md, the response body is the text "Entry not found"
    return requests.get(url).text

def get_data(model):
    readme = get_model_readme(model)
    _, labels = get_model_labels(model)
    return model.modelId, labels, readme

Since this takes a little while, we add a progress bar and fetch the data using multiple threads.

from tqdm.auto import tqdm

with tqdm(total=len(has_config)) as progress:
    with concurrent.futures.ThreadPoolExecutor() as e:
        tasks = []
        for model in has_config:
            future = e.submit(get_data, model)
            # Advance the progress bar each time a download finishes
            future.add_done_callback(lambda p: progress.update())
            tasks.append(future)
    # Collect the results in submission order
    results = [task.result() for task in tasks]

Load our data using Pandas.

import pandas as pd

df = pd.DataFrame(results, columns=['modelId', 'label', 'readme'])
df

| | modelId | label | readme |
|---|---|---|---|
| 0 | distilbert-base-uncased-finetuned-sst-2-english | [NEGATIVE, POSITIVE] | ---\nlanguage: en\nlicense: apache-2.0\ndatase... |
| 1 | cross-encoder/ms-marco-MiniLM-L-12-v2 | [LABEL_0] | ---\nlicense: apache-2.0\n---\n# Cross-Encoder... |
| 2 | cardiffnlp/twitter-xlm-roberta-base-sentiment | [Negative, Neutral, Positive] | ---\nlanguage: multilingual\nwidget:\n- text: ... |
| 3 | facebook/bart-large-mnli | [contradiction, entailment, neutral] | ---\nlicense: mit\nthumbnail: https://huggingf... |
| 4 | ProsusAI/finbert | [positive, negative, neutral] | ---\nlanguage: "en"\ntags:\n- financial-sentim... |
| ... | ... | ... | ... |
| 6845 | jinwooChoi/SKKU_AP_SA_KBT6 | [LABEL_0, LABEL_1, LABEL_2] | Entry not found |
| 6846 | jinwooChoi/SKKU_AP_SA_KBT7 | [LABEL_0, LABEL_1, LABEL_2] | Entry not found |
| 6847 | naem1023/electra-phrase-clause-classification-... | None | Entry not found |
| 6848 | naem1023/electra-phrase-clause-classification-... | None | ---\nlicense: apache-2.0\n---\n |
| 6849 | YYAH/Bert_Roman_Urdu | [LABEL_0, LABEL_1, LABEL_2, LABEL_3] | ---\nlicense: unknown\n---\n |

6850 rows × 3 columns

We now have a DataFrame containing the modelId, the model labels and the README.md for each model (where it exists).

Since the README.md (the model card) is the obvious source of information about a model, we’ll start there. One question is how long the README.md files are: some models have very detailed model cards, whilst others contain very little information. We can get a sense of this by looking at the distribution of README.md lengths:

df['readme'].apply(len).describe()

count     6850.000000
mean      1009.164818
std       1750.509155
min          0.000000
25%         15.000000
50%         20.500000
75%       1736.000000
max      56172.000000
Name: readme, dtype: float64
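The 25th percentile of 15 characters is worth a second look: "Entry not found", the text we get back for repos without a README.md (visible in the table above), is exactly 15 characters long. So many of the "short READMEs" are likely missing ones. A minimal sketch that counts that placeholder as an empty README; the sample strings are toy data:

```python
from typing import List

MISSING_README = "Entry not found"  # text returned in place of a missing README.md (see the table above)


def readme_length(text: str) -> int:
    """Length of a README, treating the missing-file placeholder as an empty README."""
    return 0 if text.strip() == MISSING_README else len(text)


# Toy data: one stub model card, two missing READMEs, one short real card
readmes: List[str] = [
    "---\nlicense: apache-2.0\n---\n",
    "Entry not found",
    "Entry not found",
    "# A model card\nThis model classifies tweets by sentiment.",
]
lengths = [readme_length(r) for r in readmes]
missing_count = sum(1 for r in readmes if r.strip() == MISSING_README)

print(len(MISSING_README))  # 15
print(missing_count)        # 2
```

With the DataFrame above, the equivalent count would be something like df['readme'].str.strip().eq('Entry not found').sum().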

We might want to filter on the length of the README, so we’ll store that information in a new column.

df['readme_len'] = df['readme'].apply(len)

Since we might want to work with this data again, let’s load it into a datasets Dataset and use push_to_hub to store a copy.

from datasets import Dataset

ds = Dataset.from_pandas(df)
ds

Dataset({ features: ['modelId', 'label', 'readme', 'readme_len'], num_rows: 6850 })

from huggingface_hub import notebook_login

notebook_login()

Login successful
Your token has been saved to /root/.huggingface/token

ds.push_to_hub('davanstrien/hf_model_metadata')

/usr/local/lib/python3.7/dist-packages/huggingface_hub/hf_api.py:1951: FutureWarning: `identical_ok` has no effect and is deprecated. It will be removed in 0.11.0.
  FutureWarning,

We can now load it again using load_dataset.

from datasets import load_dataset

ds = load_dataset('davanstrien/hf_model_metadata', split='train')

Using custom data configuration davanstrien--hf_model_metadata-019f1ad4bdf705b5
Downloading and preparing dataset None/None (download: 3.71 MiB, generated: 10.64 MiB, post-processed: Unknown size, total: 14.35 MiB) to /root/.cache/huggingface/datasets/davanstrien___parquet/davanstrien--hf_model_metadata-019f1ad4bdf705b5/0.0.0/2a3b91fbd88a2c90d1dbbb32b460cf621d31bd5b05b934492fdef7d8d6f236ec...
Dataset parquet downloaded and prepared to /root/.cache/huggingface/datasets/davanstrien___parquet/davanstrien--hf_model_metadata-019f1ad4bdf705b5/0.0.0/2a3b91fbd88a2c90d1dbbb32b460cf621d31bd5b05b934492fdef7d8d6f236ec. Subsequent calls will reuse this data.

Clean up some memory…

del df

Semantic search of model cards

We now get to the main point of all of this. Can we use semantic search to try and find models of interest? For this, we’ll use the sentence-transformers library. This blog won’t cover all the background of this library. The docs give a helpful overview and some tutorials.

To start, we’ll see if we can search using the information in the README.md. This should, in theory, contain information similar to the kinds of things we want to search for when finding candidate models. We might prefer semantic search over an exact match because the terms we use might differ from those in the model card, or because a related concept or model might be close enough to be worth fine-tuning.
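Semantic search compares embedding vectors rather than strings, so two texts can be close even when they share few words. A minimal pure-Python sketch of cosine similarity, one common way to compare embeddings; the three-dimensional vectors are made up for illustration (all-MiniLM-L6-v2 produces 384-dimensional embeddings, and sentence-transformers provides util.cos_sim for real use):

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy "embeddings": the two genre vectors point in similar directions
genre_18th = [0.9, 0.1, 0.2]
genre_19th = [0.8, 0.2, 0.25]
sentiment = [0.1, 0.9, 0.0]

print(round(cosine_similarity(genre_18th, genre_19th), 3))
print(round(cosine_similarity(genre_18th, sentiment), 3))
```

The first pair scores close to 1 and the second much lower, which is the property we hope will surface a 19th Century genre model when we search for 18th Century genre classification.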

First, we import the SentenceTransformer class and some util functions.

from sentence_transformers import SentenceTransformer, util

We’ll now download an embedding model. There are many we could choose from, but since we’re just trying things out at the moment, we won’t stress about the particular model we use here.

model = SentenceTransformer('all-MiniLM-L6-v2')

Let’s start with the longer READMEs. By “longer” I just mean READMEs that aren’t very short, here more than 100 characters:

ds_longer_readmes = ds.filter(lambda x: x['readme_len'] > 100)

We now create embeddings for the readme column and store them in a new embedding column.

def encode_readme(readme):
    # Assumes a CUDA GPU is available; pass device='cpu' otherwise.
    # sentence-transformers truncates inputs longer than model.max_seq_length tokens.
    return model.encode(readme, device='cuda')

ds_with_embeddings = ds_longer_readmes.map(
    lambda example: {"embedding": encode_readme(example['readme'])},
    batched=True,
    batch_size=16,
)
ds_with_embeddings

Dataset({ features: ['modelId', 'label', 'readme', 'readme_len', 'embedding'], num_rows: 3284 })
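With batched=True and batch_size=16, map hands encode_readme a list of up to 16 READMEs at a time, and encode returns one embedding per input. A sketch of that bookkeeping, with a hypothetical fake_encode standing in for model.encode so it runs without downloading a model:

```python
from typing import Iterator, List, Sequence


def batches(items: Sequence[str], batch_size: int = 16) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most batch_size items (the last one may be shorter)."""
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def fake_encode(texts: List[str]) -> List[List[float]]:
    """Hypothetical stand-in for model.encode: one (tiny) vector per input text."""
    return [[float(len(t))] for t in texts]


readmes = [f"readme {i}" for i in range(35)]
embeddings: List[List[float]] = []
for batch in batches(readmes, batch_size=16):
    embeddings.extend(fake_encode(batch))

print([len(b) for b in batches(readmes, 16)])  # [16, 16, 3]
print(len(embeddings))                          # 35
```

Every input row gets exactly one embedding, which is why the new embedding column lines up with the existing rows.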

We can now use add_faiss_index to create an index that allows us to efficiently query these embeddings.

ds_with_embeddings.add_faiss_index(column='embedding')

Dataset({ features: ['modelId', 'label', 'readme', 'readme_len', 'embedding'], num_rows: 3284 })
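add_faiss_index builds a faiss index over the embedding column, and get_nearest_examples then returns the k closest rows together with their scores. The sketch below is a brute-force, exact k-nearest-neighbour search using squared L2 distance, which is roughly what a flat faiss index computes; treating L2 as the metric here is an assumption, so check the datasets documentation for the defaults. It also illustrates that for unit-length vectors, the squared L2 distance equals 2 - 2 cos(a, b), so ranking by L2 distance and by cosine similarity agree.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(query: Sequence[float], vectors: Sequence[Sequence[float]],
            k: int) -> List[Tuple[float, int]]:
    """Exact k-NN: the k (distance, row index) pairs with the smallest squared L2 distance."""
    return heapq.nsmallest(k, ((squared_l2(query, v), i) for i, v in enumerate(vectors)))


# Toy unit-length "embeddings"
vectors = [normalize(v) for v in ([1, 0, 0], [0.9, 0.1, 0], [0, 1, 0], [0.5, 0.5, 0.5])]
query = normalize([1, 0.05, 0])

for distance, row in nearest(query, vectors, k=2):
    print(row, round(distance, 4))
```

Lower scores mean closer matches under this metric; a real faiss index avoids the full scan for large collections but returns the same neighbours in the exact (flat) case.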

Similar models

To start, we’ll take the README for a model and see how well this approach finds similar models.

query_readme = ds_with_embeddings[35]['readme']
print(query_readme)

# Twitter-roBERTa-base for Irony Detection This is a roBERTa-base model trained on ~58M tweets and finetuned for irony detection with the TweetEval benchmark. - Paper: [_TweetEval_ benchmark (Findings of EMNLP 2020)](https://arxiv.org/pdf/2010.12421.pdf). - Git Repo: [Tweeteval official repository](https://github.com/cardiffnlp/tweeteval). ## Example of classification ```python from transformers import AutoModelForSequenceClassification from transformers import TFAutoModelForSequenceClassification from transformers import AutoTokenizer import numpy as np from scipy.special import softmax import csv import urllib.request # Preprocess text (username and link placeholders) def preprocess(text): new_text = [ ] for t in text.split(" "): t = '@user' if t.startswith('@') and len(t) > 1 else t t = 'http' if t.startswith('http') else t new_text.append(t) return " ".join(new_text) # Tasks: # emoji, emotion, hate, irony, offensive, sentiment # stance/abortion, stance/atheism, stance/climate, stance/feminist, stance/hillary task='irony' MODEL = f"cardiffnlp/twitter-roberta-base-{task}" tokenizer = AutoTokenizer.from_pretrained(MODEL) # download label mapping labels=[] mapping_link = f"https://raw.githubusercontent.com/cardiffnlp/tweeteval/main/datasets/{task}/mapping.txt" with urllib.request.urlopen(mapping_link) as f: html = f.read().decode('utf-8').split("\n") csvreader = csv.reader(html, delimiter='\t') labels = [row[1] for row in csvreader if len(row) > 1] # PT model = AutoModelForSequenceClassification.from_pretrained(MODEL) model.save_pretrained(MODEL) text = "Great, it broke the first day..."
text = preprocess(text) encoded_input = tokenizer(text, return_tensors='pt') output = model(**encoded_input) scores = output[0][0].detach().numpy() scores = softmax(scores) # # TF # model = TFAutoModelForSequenceClassification.from_pretrained(MODEL) # model.save_pretrained(MODEL) # text = "Great, it broke the first day..." # encoded_input = tokenizer(text, return_tensors='tf') # output = model(encoded_input) # scores = output[0][0].numpy() # scores = softmax(scores) ranking = np.argsort(scores) ranking = ranking[::-1] for i in range(scores.shape[0]): l = labels[ranking] s = scores[ranking] print(f"{i+1}) {l} {np.round(float(s), 4)}") ``` Output: ``` 1) irony 0.914 2) non_irony 0.086 ```

We pass this README into the same model we used to create our embeddings, which gives us a query embedding for this README.

q = model.encode(query_readme)

We can use get_nearest_examples to look for the most similar results to this query.

scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('embedding', q, k=10)

Let’s take a look at the first result:

print(retrieved_examples['modelId'][0])

cardiffnlp/twitter-roberta-base-irony

print(retrieved_examples["readme"][0])

And a lower-similarity result:

print(retrieved_examples["readme"][9])

--- language: "en" tags: - roberta - sentiment - twitter widget: - text: "Oh no. This is bad.." - text: "To be or not to be." - text: "Oh Happy Day" --- This RoBERTa-based model can classify the sentiment of English language text in 3 classes: - positive 😀 - neutral 😐 - negative 🙁 The model was fine-tuned on 5,304 manually annotated social media posts. The hold-out accuracy is 86.1%. For details on the training approach see Web Appendix F in Hartmann et al. (2021). # Application ```python from transformers import pipeline classifier = pipeline("text-classification", model="j-hartmann/sentiment-roberta-large-english-3-classes", return_all_scores=True) classifier("This is so nice!") ``` ```python Output: [[{'label': 'negative', 'score': 0.00016451838018838316}, {'label': 'neutral', 'score': 0.000174045650055632}, {'label': 'positive', 'score': 0.9996614456176758}]] ``` # Reference Please cite (https://journals.sagepub.com/doi/full/10.1177/00222437211037258) when you use our model. Feel free to reach out to (mailto:j.p.hartmann@rug.nl) with any questions or feedback you may have. ``` @article{hartmann2021, title={The Power of Brand Selfies}, author={Hartmann, Jochen and Heitmann, Mark and Schamp, Christina and Netzer, Oded}, journal={Journal of Marketing Research} year={2021} } ```

The results seem reasonable: the first result is simply the query model itself, and the lower-ranked result is for a slightly different task (three-class sentiment) that also uses social media data.
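Since the query README is itself in the index, the top hit is just the query model. A small sketch that drops results carrying the query’s modelId, working on the dict-of-columns shape that get_nearest_examples returns (as used above with retrieved_examples['modelId']). drop_self_matches is a hypothetical helper, and the scores and other model names are toy data:

```python
from typing import Dict, List, Sequence, Tuple


def drop_self_matches(query_id: str, scores: Sequence[float],
                      examples: Dict[str, List]) -> Tuple[List[float], Dict[str, List]]:
    """Remove rows whose modelId equals query_id from parallel score/example columns."""
    keep = [i for i, model_id in enumerate(examples["modelId"]) if model_id != query_id]
    kept_scores = [scores[i] for i in keep]
    kept_examples = {col: [values[i] for i in keep] for col, values in examples.items()}
    return kept_scores, kept_examples


scores = [0.0, 0.31, 0.45]
examples = {
    "modelId": ["cardiffnlp/twitter-roberta-base-irony", "model-a", "model-b"],
    "readme": ["# Twitter-roBERTa-base for Irony Detection", "...", "..."],
}
scores, examples = drop_self_matches("cardiffnlp/twitter-roberta-base-irony", scores, examples)
print(examples["modelId"])  # ['model-a', 'model-b']
```

Asking for one extra result (k=11) would keep ten candidates whenever the query model is among the hits.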

Searching

Being able to find similar model cards is a start but we actually wanted to be able to search directly. Let’s see how our results do if we instead search for some terms we might use to try and find suitable models.

q = model.encode("fake news")
scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('embedding', q, k=10)
print(retrieved_examples["readme"][0])

This model is fined tuned for the Fake news classifier: Train a text classification model to detect fake news articles. Base on the Kaggle dataset(https://www.kaggle.com/clmentbisaillon/fake-and-real-news-dataset).

print(retrieved_examples["readme"][1])

Fake news classifier This model trains a text classification model to detect fake news articles, it uses distilbert-base-uncased-finetuned-sst-2-english pretrained model to work on fake and real news dataset from kaggle (https://www.kaggle.com/clmentbisaillon/fake-and-real-news-dataset)

print(retrieved_examples["readme"][2])

--- license: mit --- # Fake and real news classification task Model : [DistilRoBERTa base model](https://huggingface.co/distilroberta-base) Dataset : [Fake and real news dataset](https://www.kaggle.com/datasets/clmentbisaillon/fake-and-real-news-dataset)

Not a bad start. Let’s try another one:

q = model.encode("financial sentiment")

scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('embedding', q, k=10)

print(retrieved_examples["readme"][0])

--- language: en tags: - financial-sentiment-analysis - sentiment-analysis datasets: - financial_phrasebank widget: - text: Operating profit rose to EUR 13.1 mn from EUR 8.7 mn in the corresponding period in 2007 representing 7.7 % of net sales. - text: Bids or offers include at least 1,000 shares and the value of the shares must correspond to at least EUR 4,000. - text: Raute reported a loss per share of EUR 0.86 for the first half of 2009 , against EPS of EUR 0.74 in the corresponding period of 2008. --- ### FinancialBERT for Sentiment Analysis [*FinancialBERT*](https://huggingface.co/ahmedrachid/FinancialBERT) is a BERT model pre-trained on a large corpora of financial texts. The purpose is to enhance financial NLP research and practice in financial domain, hoping that financial practitioners and researchers can benefit from this model without the necessity of the significant computational resources required to train the model. The model was fine-tuned for Sentiment Analysis task on _Financial PhraseBank_ dataset. Experiments show that this model outperforms the general BERT and other financial domain-specific models. More details on `FinancialBERT`'s pre-training process can be found at: https://www.researchgate.net/publication/358284785_FinancialBERT_-_A_Pretrained_Language_Model_for_Financial_Text_Mining ### Training data FinancialBERT model was fine-tuned on [Financial PhraseBank](https://www.researchgate.net/publication/251231364_FinancialPhraseBank-v10), a dataset consisting of 4840 Financial News categorised by sentiment (negative, neutral, positive). ### Fine-tuning hyper-parameters - learning_rate = 2e-5 - batch_size = 32 - max_seq_length = 512 - num_train_epochs = 5 ### Evaluation metrics The evaluation metrics used are: Precision, Recall and F1-score. The following is the classification report on the test set.
| sentiment | precision | recall | f1-score | support | | ------------- |:-------------:|:-------------:|:-------------:| -----:| | negative | 0.96 | 0.97 | 0.97 | 58 | | neutral | 0.98 | 0.99 | 0.98 | 279 | | positive | 0.98 | 0.97 | 0.97 | 148 | | macro avg | 0.97 | 0.98 | 0.98 | 485 | | weighted avg | 0.98 | 0.98 | 0.98 | 485 | ### How to use The model can be used thanks to Transformers pipeline for sentiment analysis. ```python from transformers import BertTokenizer, BertForSequenceClassification from transformers import pipeline model = BertForSequenceClassification.from_pretrained("ahmedrachid/FinancialBERT-Sentiment-Analysis",num_labels=3) tokenizer = BertTokenizer.from_pretrained("ahmedrachid/FinancialBERT-Sentiment-Analysis") nlp = pipeline("sentiment-analysis", model=model, tokenizer=tokenizer) sentences = ["Operating profit rose to EUR 13.1 mn from EUR 8.7 mn in the corresponding period in 2007 representing 7.7 % of net sales.", "Bids or offers include at least 1,000 shares and the value of the shares must correspond to at least EUR 4,000.", "Raute reported a loss per share of EUR 0.86 for the first half of 2009 , against EPS of EUR 0.74 in the corresponding period of 2008.", ] results = nlp(sentences) print(results) [{'label': 'positive', 'score': 0.9998133778572083}, {'label': 'neutral', 'score': 0.9997822642326355}, {'label': 'negative', 'score': 0.9877365231513977}] ``` > Created by [Ahmed Rachid Hazourli](https://www.linkedin.com/in/ahmed-rachid/)

print(retrieved_examples["readme"][1])

--- language: "en" tags: - financial-sentiment-analysis - sentiment-analysis widget: - text: "Stocks rallied and the British pound gained." --- FinBERT is a pre-trained NLP model to analyze sentiment of financial text. It is built by further training the BERT language model in the finance domain, using a large financial corpus and thereby fine-tuning it for financial sentiment classification. [Financial PhraseBank](https://www.researchgate.net/publication/251231107_Good_Debt_or_Bad_Debt_Detecting_Semantic_Orientations_in_Economic_Texts) by Malo et al. (2014) is used for fine-tuning. For more details, please see the paper [FinBERT: Financial Sentiment Analysis with Pre-trained Language Models](https://arxiv.org/abs/1908.10063) and our related (https://medium.com/prosus-ai-tech-blog/finbert-financial-sentiment-analysis-with-bert-b277a3607101) on Medium. The model will give softmax outputs for three labels: positive, negative or neutral. --- About Prosus Prosus is a global consumer internet group and one of the largest technology investors in the world. Operating and investing globally in markets with long-term growth potential, Prosus builds leading consumer internet companies that empower people and enrich communities. For more information, please visit www.prosus.com. Contact information Please contact Dogu Araci dogu.araciprosuscom and Zulkuf Genc zulkuf.gencprosuscom about any FinBERT related issues and questions.

print(retrieved_examples["readme"][9])

--- license: apache-2.0 tags: - Finance-sentiment-analysis - generated_from_trainer metrics: - f1 - accuracy - precision - recall model-index: - name: bert-base-finance-sentiment-noisy-search results: [] widget: - text: "Third quarter reported revenues were $10.9 billion, up 5 percent compared to prior year and up 8 percent on a currency-neutral basis" example_title: "Positive" - text: "The London-listed website for businesses reported a pretax loss of $26.6 million compared with a loss of $12.9 million the previous year" example_title: "Negative" - text: "Microsoft updates Outlook, Teams, and PowerPoint to be hybrid work ready" example_title: "Neutral" --- <!-- This model card has been generated automatically according to the information the Trainer had access to. You should probably proofread and complete it, then remove this comment. --> # bert-base-finance-sentiment-noisy-search This model is a fine-tuned version of (https://huggingface.co/bert-base-uncased) on Kaggle finance news sentiment analysis with data enhancement using noisy search. The process is explained below: 1. First "bert-base-uncased" was fine-tuned on Kaggle's finance news sentiment analysis https://www.kaggle.com/ankurzing/sentiment-analysis-for-financial-news dataset achieving accuracy of about 88% 2. We then used a logistic-regression classifier on the same data. Here we looked at coefficients that contributed the most to the "Positive" and "Negative" classes by inspecting only bi-grams. 3. Using the top 25 bi-grams per class (i.e. "Positive" / "Negative") we invoked Bing news search with those bi-grams and retrieved up to 50 news items per bi-gram phrase. 4. We called it "noisy-search" because it is assumed the positive bi-grams (e.g. "profit rose" , "growth net") give rise to positive examples whereas negative bi-grams (e.g.
"loss increase", "share loss") result in negative examples but note that we didn't test for the validity of this assumption (hence: noisy-search) 5. For each article we kept the title + excerpt and labeled it according to pre-assumptions on class associations. 6. We then trained the same model on the noisy data and apply it to an held-out test set from the original data set split. 7. Training with couple of thousands noisy "positives" and "negatives" examples yielded a test set accuracy of about 95%. 8. It shows that by automatically collecting noisy examples using search we can boost accuracy performance from about 88% to more than 95%. Accuracy results for Logistic Regression (LR) and BERT (base-cased) are shown in the attached pdf: https://drive.google.com/file/d/1MI9gRdppactVZ_XvhCwvoaOV1aRfprrd/view?usp=sharing ## Model description BERT model trained on noisy data from search results. See PDF for more details. ## Intended uses & limitations Intended for use on finance news sentiment analysis with 3 options: "Positive", "Neutral" and "Negative" To get the best results feed the classifier with the title and either the 1st paragraph or a short news summarization e.g. of up to 64 tokens. ### Training hyperparameters The following hyperparameters were used during training: - learning_rate: 5e-05 - train_batch_size: 8 - eval_batch_size: 8 - seed: 42 - optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08 - lr_scheduler_type: linear - num_epochs: 5 ### Framework versions - Transformers 4.16.2 - Pytorch 1.10.0+cu111 - Datasets 1.18.3 - Tokenizers 0.11.0

These seem like a good starting point. However, relying on model cards alone has a few issues. Firstly, many models don’t include one, and the quality of those that do is mixed. It’s debatable whether we’d want to use a model with no model card at all, but even a good model card may not contain everything we’d need for searching.

Can we search using model labels?

We’re only working with classification models in this case, and most PyTorch models on the hub have a config file. This config usually contains the model’s labels, for example ‘positive’ and ‘negative’.

Maybe, instead of relying only on the metadata, we can search ‘inside’ the model. The labels will often be a helpful reflection of what a model does. For example, if we want a sentiment classification model that roughly sorts text into positive or negative sentiment, we’d expect its labels to look something like ‘positive’ and ‘negative’. Again, relying on exact label matches may not work well, but embeddings might get around this problem. Let’s try it out!

Let’s look at an example label.

ds[0]['label']
['NEGATIVE', 'POSITIVE']

Since we’re expecting labels to be a list like this, let’s filter out any examples that don’t fit this structure.

ds = ds.filter(lambda example: isinstance(example['label'], list))

How to create embeddings for our labels?

How should we encode our labels? At the moment, each model has a list of labels. One option would be to create an embedding for every single label, but that would require us to query multiple embeddings per model to check for a match. Intuitively, we may also prefer a single embedding for the combination of labels, since we probably learn more about what a model does from all of its labels together than from any one label at a time. We’ll deal with the labels very crudely by joining them with a comma and creating a single string out of all the labels. I’m sure this isn’t the best possible approach, but it might be a good place to start testing this idea.
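To make the trade-off concrete, here is a small numpy sketch of the first option (one embedding per label), assuming we already have a vector for every label from some encoder. Each model is scored by the best cosine similarity between the query and any of its labels. The toy 3-d vectors and the score_per_label helper are made up for illustration; in this post the vectors would come from the sentence-transformers model.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Cosine similarity between vector `a` and each row of matrix `b`."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return b @ a


def score_per_label(query_vec: np.ndarray, label_vecs: np.ndarray) -> float:
    """Option 1: one embedding per label; a model scores as well as its best-matching label."""
    return float(cosine(query_vec, label_vecs).max())


# Toy embeddings: each row is one label of a hypothetical model.
sentiment_model = np.array([[1.0, 0.0, 0.0],   # "negative"
                            [0.8, 0.6, 0.0]])  # "positive"
genre_model = np.array([[0.0, 0.0, 1.0],       # "Rock"
                        [0.0, 0.6, 0.8]])      # "Pop"
query = np.array([1.0, 0.1, 0.0])

scores = {name: score_per_label(query, vecs)
          for name, vecs in {"sentiment": sentiment_model, "genre": genre_model}.items()}
print(max(scores, key=scores.get))  # sentiment
```

The joined-string approach used in this post avoids the per-model aggregation step: each model gets a single vector, so one nearest-neighbour lookup is enough.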

ds = ds.map(lambda example: {"string_label": ",".join(example['label'])})

ds
Dataset({ features: ['modelId', 'label', 'readme', 'readme_len', 'string_label'], num_rows: 4175 })

ds_with_embeddings = ds.map(
    lambda batch: {"label_embedding": encode_readme(batch['string_label'])},
    batched=True,
    batch_size=16,
)

ds_with_embeddings
Dataset({ features: ['modelId', 'label', 'readme', 'readme_len', 'string_label', 'label_embedding'], num_rows: 4175 })

Searching with labels

Now that we have embeddings for the labels, let’s try searching. We’ll start with an existing set of labels to see how well we can match those.

ds_with_embeddings[0]['string_label']
'NEGATIVE,POSITIVE'

q = model.encode("negative")

ds_with_embeddings.add_faiss_index(column='label_embedding')
Dataset({ features: ['modelId', 'label', 'readme', 'readme_len', 'string_label', 'label_embedding'], num_rows: 4175 })

scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('label_embedding', q, k=10)
retrieved_examples['label'][:10]
[ ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'], ['negative', 'positive'] ]
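Under the hood, get_nearest_examples asks the FAISS index for the k stored vectors closest to the query. As I understand it, when add_faiss_index is called without a metric or index factory it builds a flat (exhaustive) index with the L2 metric, so the returned scores are squared Euclidean distances and smaller means closer. The brute-force numpy sketch below, with a hypothetical nearest_l2 helper, illustrates what such a flat index computes; it is not the datasets or FAISS implementation.

```python
import numpy as np


def nearest_l2(embeddings: np.ndarray, query: np.ndarray, k: int = 10):
    """Brute-force k-nearest-neighbour search by squared L2 distance.

    Computes the distance from the query to every stored vector and keeps
    the k smallest, which is what an exhaustive L2 index does.
    """
    # Squared Euclidean distance from the query to every row.
    dists = ((embeddings - query) ** 2).sum(axis=1)
    k = min(k, len(dists))
    # A full sort is fine for a few thousand rows, as in this post.
    idx = np.argsort(dists, kind="stable")[:k]
    return dists[idx], idx


# Toy "label embeddings" for four models and a query vector.
emb = np.array([[0.0, 1.0], [1.0, 0.0], [0.9, 0.1], [-1.0, 0.0]], dtype=np.float32)
q = np.array([1.0, 0.0], dtype=np.float32)
scores, idx = nearest_l2(emb, q, k=2)
print(idx.tolist())  # [1, 2]
```

If the embeddings are normalised to unit length, ranking by L2 distance gives the same order as ranking by cosine similarity, since the squared distance between unit vectors is 2 minus twice their cosine.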

So far, these results look pretty good, although we haven’t done anything we couldn’t do with simple string matching. Let’s see what happens if we use a slightly more abstract search.

q = model.encode("music")
scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('label_embedding', q, k=10)
retrieved_examples['label'][:10]
[ ['Dance', 'Heavy Metal', 'Hip Hop', 'Indie', 'Pop', 'Rock'], ['Dance', 'Heavy Metal', 'Hip Hop', 'Indie', 'Pop', 'Rock'], ['Dance', 'Heavy Metal', 'Hip Hop', 'Indie', 'Pop', 'Rock'], [ 'Alternative', 'Country', 'Eletronic Music', 'Gospel and Worship Songs', 'Hip-Hop', 'Jazz/Blues', 'Pop', 'R&B/Soul', 'Reggae', 'Rock' ], ['business', 'entertainment', 'sports'], ['_silence_', '_unknown_', 'down', 'go', 'left', 'no', 'off', 'on', 'right', 'stop', 'up', 'yes'], ['angry', 'happy', 'others', 'sad'], ['Feeling', 'Thinking'], [ 'am_thuc', 'bong_da', 'cho_thue', 'doi_song', 'dong_vat', 'mua_ban', 'nhac', 'phim', 'phu_kien', 'sach', 'showbiz', 'the_thao', 'thoi_trang_nam', 'thoi_trang_nu', 'thuc_vat', 'tin_bds', 'tin_tuc', 'tri_thuc' ], ['intimacy'] ]

The first four results are all music-genre label sets: three models share ['Dance', 'Heavy Metal', 'Hip Hop', 'Indie', 'Pop', 'Rock'], and the fourth uses a longer list of genres. After that, we get back ['business', 'entertainment', 'sports'], which might not be too far off what we want if we searched for music.

How about another search term?

q = model.encode("hateful")
scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('label_embedding', q, k=10)
retrieved_examples['label'][:10]
[ ['Hateful', 'Not hateful'], ['Hateful', 'Not hateful'], ['hateful', 'non-hateful'], ['hateful', 'non-hateful'], ['hateful', 'non-hateful'], ['HATE', 'NOT_HATE'], ['NON_HATE', 'HATE'], ['NON_HATE', 'HATE'], ['NON_HATE', 'HATE'], ['NON_HATE', 'HATE'] ]

Again here we have something quite close to what we’d get with string matching, but we have a bit more flexibility in how we spell/define our labels which might help surface more possible results.
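For comparison, a plain string-matching baseline might look like the sketch below: a hypothetical helper doing case-insensitive substring matching over the label lists. For the query "hateful" it finds the 'Hateful' and 'non-hateful' label sets but misses models labelled 'HATE' and 'NOT_HATE', which the embedding search above still surfaced.

```python
def string_match(query: str, label_sets: list) -> list:
    """Return the label sets in which any label contains `query` (case-insensitive)."""
    q = query.lower()
    return [labels for labels in label_sets if any(q in label.lower() for label in labels)]


# A few label sets copied from the results above.
label_sets = [
    ["Hateful", "Not hateful"],
    ["hateful", "non-hateful"],
    ["HATE", "NOT_HATE"],
    ["NON_HATE", "HATE"],
]
print(string_match("hateful", label_sets))
# [['Hateful', 'Not hateful'], ['hateful', 'non-hateful']]
```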

Let’s wrap the search in a small helper function and try a few more queries.

def query_labels(query: str):
    # Embed the query and print the 10 nearest label sets alongside their model ids.
    q = model.encode(query)
    scores, retrieved_examples = ds_with_embeddings.get_nearest_examples('label_embedding', q, k=10)
    print(f"results for: {query}")
    print(list(zip(retrieved_examples['label'][:10], retrieved_examples['modelId'][:10])))

query_labels("politics")
results for: politics

[ (['Democrat', 'Republican'], 'm-newhauser/distilbert-political-tweets'), (['Geopolitical', 'Personal', 'Political', 'Religious'], 'dee4hf/autotrain-deephate2-1093539673'), (['None', 'Environmental', 'Social', 'Governance'], 'yiyanghkust/finbert-esg'), (['business', 'entertainment', 'sports'], 'bipin/malayalam-news-classifier'), ( ['CRIME', 'ENTERTAINMENT', 'Finance', 'POLITICS', 'SPORTS', 'Terrorism'], 'Yarn007/autotrain-Napkin-872827783' ), (['business', 'entertainment', 'politics', 'sport', 'tech'], 'abhishek/autonlp-bbc-roberta-37249301'), ( ['business', 'entertainment', 'politics', 'sport', 'tech'], 'abhishek/autonlp-bbc-news-classification-37229289' ), (['business', 'entertainment', 'politics', 'sport', 'tech'], 'Yarn/autotrain-Traimn-853827191'), (['Neutral', 'Propaganda'], 'Real29/my-model-proppy'), (['Neutral', 'Propaganda'], 'Real29/my-model-ptc') ]

query_labels("fiction, non_fiction")
results for: fiction, non_fiction

[ ( ['action', 'drama', 'horror', 'sci_fi', 'superhero', 'thriller'], 'Tejas3/distillbert_110_uncased_movie_genre' ), (['action', 'drama', 'horror', 'sci_fi', 'superhero', 'thriller'], 'Tejas3/distillbert_110_uncased_v1'), ( ['action', 'animation', 'comedy', 'drama', 'romance', 'thriller'], 'langfab/distilbert-base-uncased-finetuned-movie-genre' ), (['HATE', 'NON_HATE'], 'anthonny/dehatebert-mono-spanish-finetuned-sentiments_reviews_politicos'), (['NON_HATE', 'HATE'], 'Hate-speech-CNERG/dehatebert-mono-english'), (['NON_HATE', 'HATE'], 'Hate-speech-CNERG/dehatebert-mono-german'), (['NON_HATE', 'HATE'], 'Hate-speech-CNERG/dehatebert-mono-italian'), (['NON_HATE', 'HATE'], 'Hate-speech-CNERG/dehatebert-mono-spanish'), (['NON_HATE', 'HATE'], 'Hate-speech-CNERG/dehatebert-mono-portugese'), (['NON_HATE', 'HATE'], 'Hate-speech-CNERG/dehatebert-mono-polish') ]

Let’s try the set of emotions one should feel every day.

query_labels("worry, disgust, anxiety, fear")
results for: worry, disgust, anxiety, fear

[ (['anger', 'disgust', 'fear', 'guilt', 'joy', 'sadness', 'shame'], 'crcb/isear_bert'), ( ['anger', 'disgust', 'fear', 'joy', 'others', 'sadness', 'surprise'], 'pysentimiento/robertuito-emotion-analysis' ), ( ['anger', 'disgust', 'fear', 'joy', 'others', 'sadness', 'surprise'], 'daveni/twitter-xlm-roberta-emotion-es' ), ( ['anger', 'disgust', 'fear', 'joy', 'others', 'sadness', 'surprise'], 'finiteautomata/beto-emotion-analysis' ), ( ['anger', 'disgust', 'fear', 'joy', 'others', 'sadness', 'surprise'], 'finiteautomata/bertweet-base-emotion-analysis' ), (['ANGER', 'DISGUST', 'FEAR', 'HAPPINESS', 'NEUTRALITY', 'SADNESS', 'SURPRISED'], 'Gunulhona/tbecmodel'), ( ['anger', 'anticipation', 'disgust', 'fear', 'joy', 'sadness', 'surprise', 'trust'], 'Yuetian/bert-base-uncased-finetuned-plutchik-emotion' ), (['anger', 'fear', 'happy', 'love', 'sadness'], 'jasonpratamas7/Thesis-Model-1'), (['anger', 'fear', 'happy', 'love', 'sadness'], 'jasonpratamas7/Thesis-Model1'), (['anger', 'fear', 'happy', 'love', 'sadness'], 'StevenLimcorn/indonesian-roberta-base-emotion-classifier') ]

Searching with a set of labels, as in this example, might be a better approach in general, since the query then more closely matches the format of the label strings we embedded.
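One cheap way to push this further, sketched under the assumption that queries are typed as comma-separated labels, is to normalise the query into exactly the format used for string_label (labels joined with a comma, no spaces) before encoding it. The to_label_query helper is hypothetical.

```python
def to_label_query(query: str) -> str:
    """Normalise 'worry, disgust , fear' into 'worry,disgust,fear'.

    Mirrors the ",".join(labels) format used to build string_label;
    empty entries (e.g. from a trailing comma) are dropped.
    """
    parts = [part.strip() for part in query.split(",")]
    return ",".join(part for part in parts if part)


print(to_label_query("worry, disgust, anxiety, fear"))  # worry,disgust,anxiety,fear
# The normalised string would then be passed to model.encode(...) as before.
```

Whether this changes the rankings in practice would need testing, since sentence embedding models may be fairly insensitive to spacing.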

Conclusion

It seems like there is some merit in exploring these ideas further. There are a lot of improvements that could be made:

- how the embeddings are created
- removing some ‘noise’ from the README, for example, by first parsing the Markdown
- improving how the embeddings are created for the labels
- combining the embeddings in some way, either upfront or when querying
- a bunch of other things…
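As a sketch of the "combining the embeddings when querying" idea, assuming each model has both a README embedding and a label embedding, one simple option is a weighted sum of the two cosine similarities. The combined_scores helper and the default weight of 0.5 are arbitrary choices for illustration, not a tested recipe.

```python
import numpy as np


def normalise(x: np.ndarray) -> np.ndarray:
    """Scale vectors (rows) to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def combined_scores(query: np.ndarray, readme_emb: np.ndarray,
                    label_emb: np.ndarray, weight: float = 0.5) -> np.ndarray:
    """Weighted sum of README and label cosine similarities (higher is better)."""
    q = normalise(query)
    readme_sim = normalise(readme_emb) @ q
    label_sim = normalise(label_emb) @ q
    return weight * readme_sim + (1 - weight) * label_sim


# Toy embeddings for three models (rows) and one query.
readme_emb = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
label_emb = np.array([[0.0, 1.0], [0.0, 1.0], [1.0, 0.0]])
query = np.array([1.0, 0.0])
scores = combined_scores(query, readme_emb, label_emb)
print(np.argsort(-scores).tolist())  # [2, 0, 1]
```

Model 0 has the best README match but unrelated labels, while model 2 matches reasonably on both, so the combination ranks model 2 first; tuning the weight shifts how much each source counts.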

If I find some spare time, I plan to dig into these topics a bit further. This is also a nice excuse to play with one of the new open source embedding databases that have popped up in the last couple of years.